ABCDEFG… LEMME Unravel Kourtney Kardashian's Latest Supplement Fad

Slapping "GLP-1" on a label doesn't magically make it a fast track to weight loss: A guide for spotting weak and shady statistics behind supplement claims

With prescription weight loss drugs, particularly those mimicking GLP-1, proving to be effective and highly sought after for treating obesity and reducing other health risks, it was only a matter of time before overpriced, overhyped, and scantily researched supplements would jump in, claiming to be the “natural” alternative. Enter Kourtney Kardashian’s latest venture, LEMME’s GLP-1 Daily capsules, doing what they do best: cashing in on insecurities, spreading them to the masses, and banking on a general lack of understanding about how these compounds actually work (and sadly, how to properly evaluate a study) — especially the difference between a supplement and a real prescription drug. The company claims its supplement can boost the body’s natural GLP-1 (that doesn’t really matter), reduce hunger and support healthy weight management (those are bold claims).

But LEMME break it down for you: LEMME’s supplement isn’t likely to do much at all, and maybe more importantly, lacks safety data. This two-part series will first explore the rigorous clinical trials behind FDA-approved weight loss drugs and highlight why the "trials" cited by supplement companies are insufficient to support their claims. In short, the results are largely clinically irrelevant (meaning, they don’t make much of a difference) and likely driven by chance. In the second part, we’ll examine the underlying mechanisms, discuss how proper trials should be conducted, and address the challenges in obesity medicine that these supplements tend to ignore. Stay with me.

How do GLP-1 drugs work?

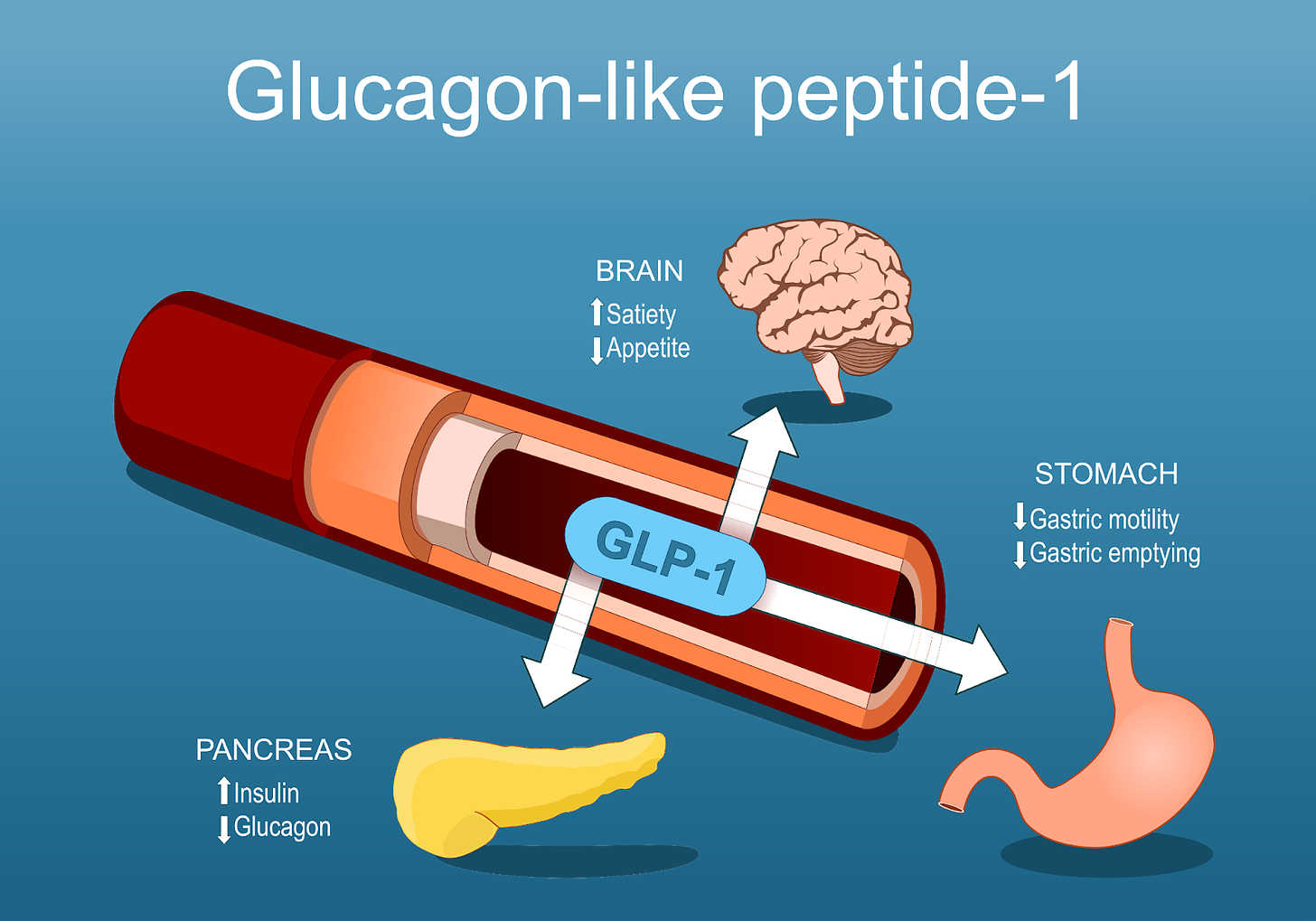

To understand this scam, it’s helpful to have a basic idea of how GLP-1 drugs work. The proper (full) name for this class of drugs is glucagon-like peptide-1 (GLP-1) receptor agonists (GLP-1 RAs). Breaking this down further, this means that these drugs act on the receptors for GLP-1 to induce a signal that, in this case, reproduces the normal effect of GLP-1 (we will get into why your body’s own GLP-1 isn’t enough in this case a bit later). These receptors are expressed across numerous cells of the body, including some unexpected sites, but for the purposes of this discussion we can say that GLP-1 receptors are found in the pancreas, brain, and stomach. Two main sites produce GLP-1 in the body: the intestine (specifically L cells) and certain neurons in a region of the brain called the nucleus of the solitary tract (we can just say intestine and brain to keep things simple).

When GLP-1 RAs are administered and signal through these receptors, the following effects occur:

Enhanced Insulin Secretion: GLP-1 medications stimulate the pancreas to produce more insulin in response to food intake, helping to lower blood sugar levels.

Decreased Glucagon Release: These drugs prevent the release of glucagon when blood glucose levels rise beyond fasting levels. This helps to ensure blood glucose levels remain controlled.

Slowed Gastric Emptying: GLP-1 medications slow down the stomach's emptying process, reducing hunger by promoting a sense of fullness. This may also cause nausea in some people.

Appetite Suppression: They influence the brain's appetite-regulating centers, helping to decrease cravings and overall food consumption.

Why Supplements Like LEMME’s GLP-1 Daily Are Problematic: Aside from the misleading claims, LEMME's “GLP-1 Daily,” unlike FDA-approved drugs such as Ozempic, which require prescriptions and medical oversight, is marketed and sold without such controls. Despite claims that the three patented bioflavonoids in LEMME’s “GLP-1 Daily” have been "tested in humans," there's little solid evidence supporting their effectiveness, especially in this specific formulation. Testing ingredients independently in poorly designed studies isn’t evidence and slapping "GLP-1" on a label doesn't magically make it a fast track to weight loss. If it were that simple, the pharmaceutical industry wouldn’t have spent decades developing stable GLP-1 analogs as the natural form breaks down too fast in the body to be effective.

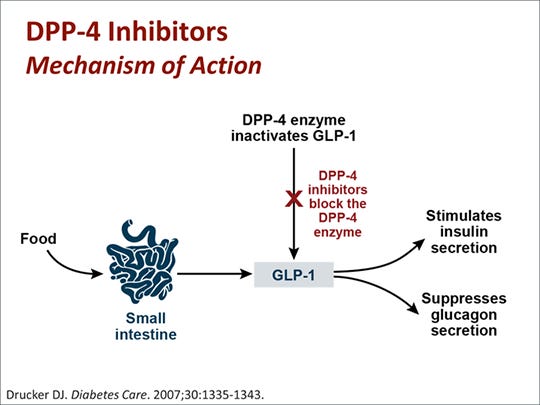

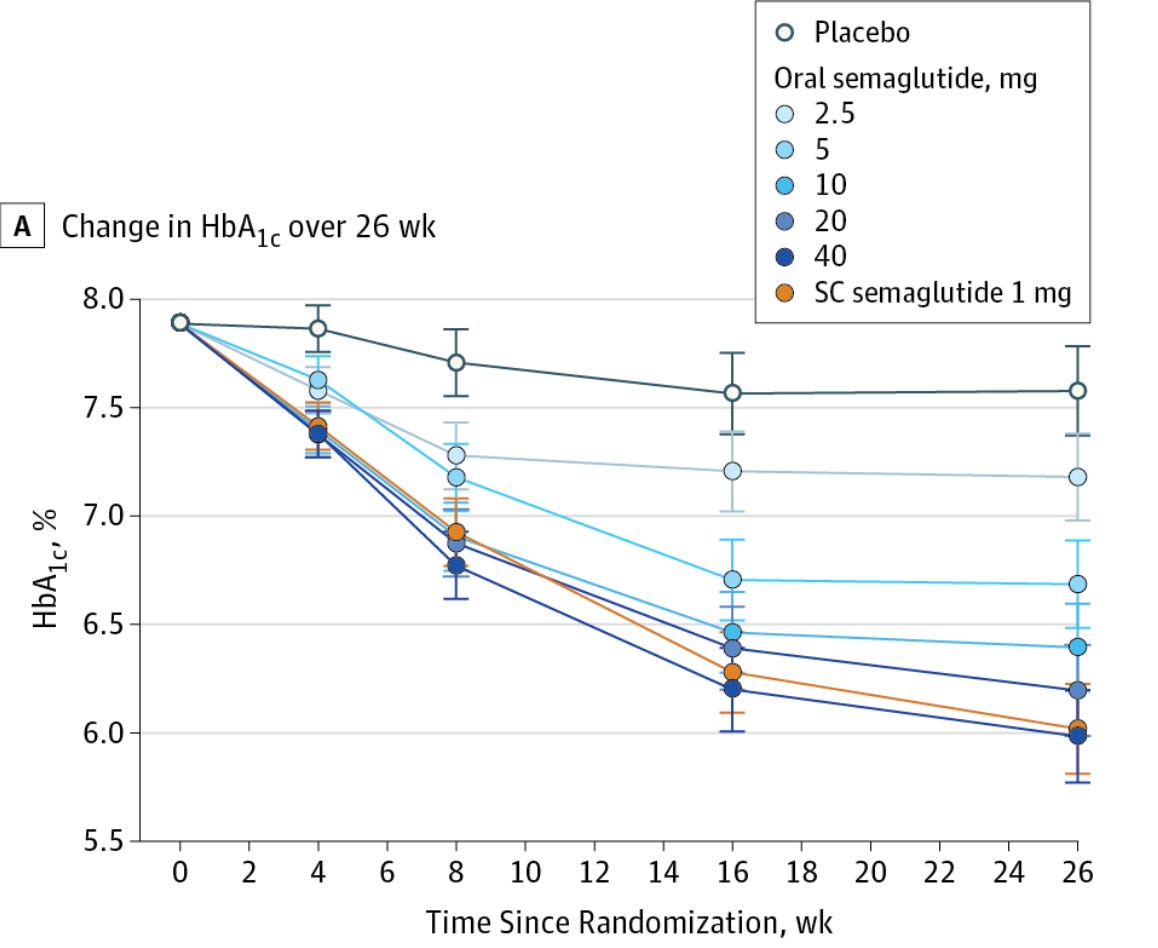

We know this because we have multiple drugs that do increase the normal levels of GLP-1 in the body, and none of them lead to significant weight loss. For example, one class of antidiabetic medications, DPP4 (dipeptidyl peptidase 4) inhibitors, aka gliptins, prevents the breakdown of GLP-1 and GIP by DPP4. This leads to increased levels of the hormones in the body, allowing them to have their incretin effect (increased insulin secretion). This results in more rapid control of the rise in blood sugar after eating, which has metabolic benefits. Despite this, meaningful weight loss does not occur. Even the oral form of semaglutide, under the brand name Rybelsus, has a minimal effect on weight loss; Novo Nordisk is currently examining a much higher dose of the drug for the purpose of weight loss, with some promising early results. By itself, this does not mean that Kardashian’s capsules cannot work: it is hypothetically possible that they cause weight loss by a mechanism other than GLP-1 receptor agonism. However, given that she is using GLP-1 as the brand name, we should approach it with appropriate skepticism. It is simply not plausible that meaningful weight loss would occur from a supplement that raises, or claims to raise, the body’s own GLP-1 levels. GLP-1 itself has a half-life in the body of just 2 minutes, so it would take extraordinary increases in the levels of the hormone to reach exposures that cause weight loss, something that so far has been achievable only by modifying GLP-1 to have a much longer half-life.

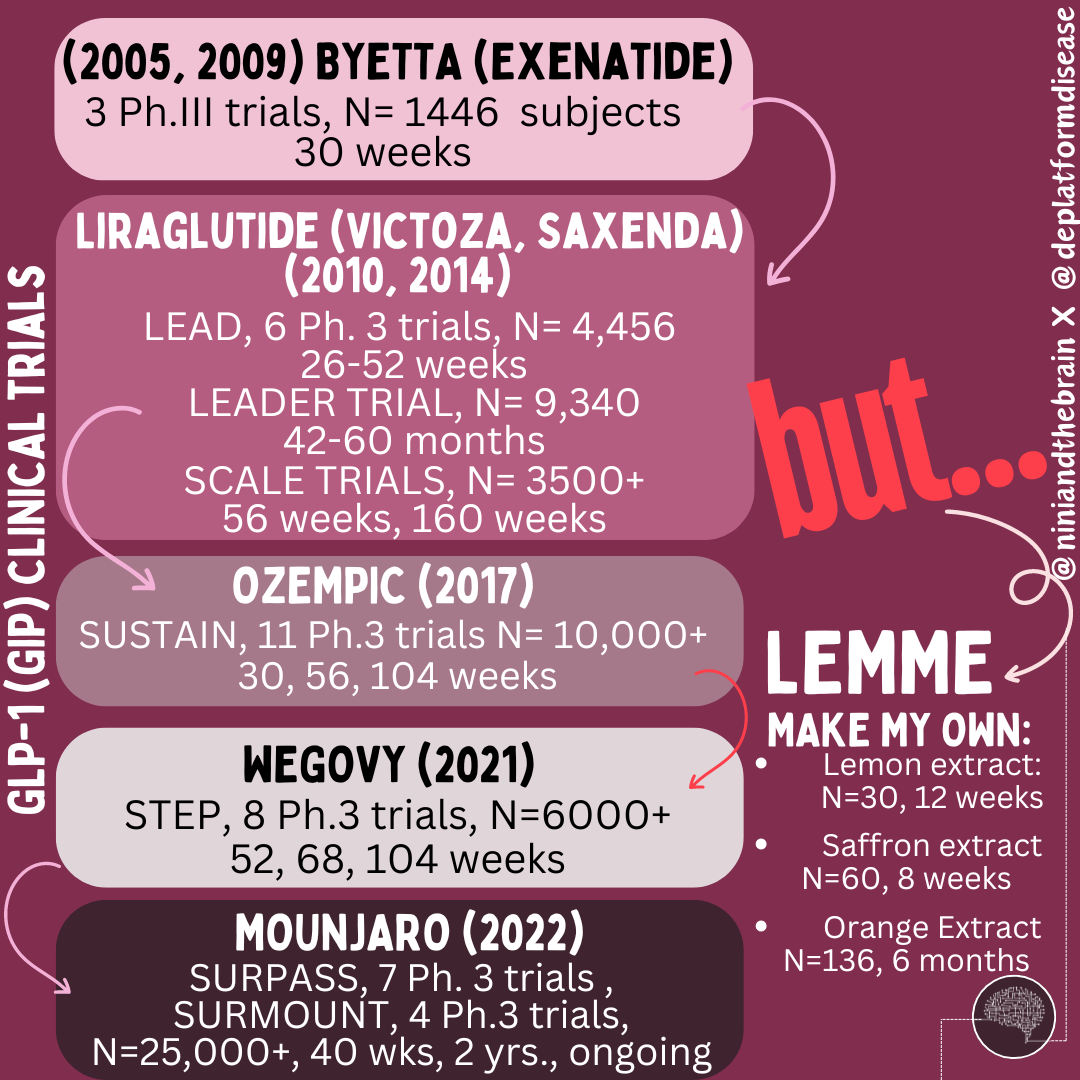

Pharmaceutical companies developed GLP-1 receptor agonists only after decades of research. For example, exendin-4, a molecule mimicking GLP-1, was discovered in the early 1990s in the venom of the Gila monster and led to the development of Byetta, approved in 2005 after extensive trials.

After exenatide (Byetta) was approved as an adjunct therapy it would be another 4 years until FDA approval as a monotherapy in 2009. Liraglutide (Victoza) was approved in 2010, and a higher-dose version (Saxenda) received approval in 2014 specifically for weight management in individuals with a BMI of 30 or more, or a BMI of 27 or more with a weight-related health condition. More recently, semaglutide (Ozempic) was approved in 2017 for type 2 diabetes and in 2021 for weight management (Wegovy) in the United States. GIP receptor agonists, often combined with GLP-1 receptor agonists (as in the dual agonist tirzepatide, approved under the brand name Mounjaro in 2022), went through similar extensive development and trials. Tirzepatide's approval was based on results from the SURPASS clinical trial program, which included over 25,000 patients across multiple studies and is still ongoing.

Furthermore, GLP-1 and GIP receptor drugs are large molecules that must be injected because they are not well absorbed in the gut. Attempts to create oral versions, like Novo Nordisk's Rybelsus, required high doses to be effective. Small-molecule drugs targeting GLP-1 receptors, such as Pfizer’s danuglipron and Eli Lilly’s orforglipron, are in development but face challenges like gastrointestinal side effects. While oral semaglutide (Rybelsus) is taken as a pill at the same time every day, Ozempic and Wegovy are injected under the skin once a week. It is far-fetched to think LEMME figured out an oral administration route that is effective.

The Rigorous Journey of GLP-1 Receptor Agonists

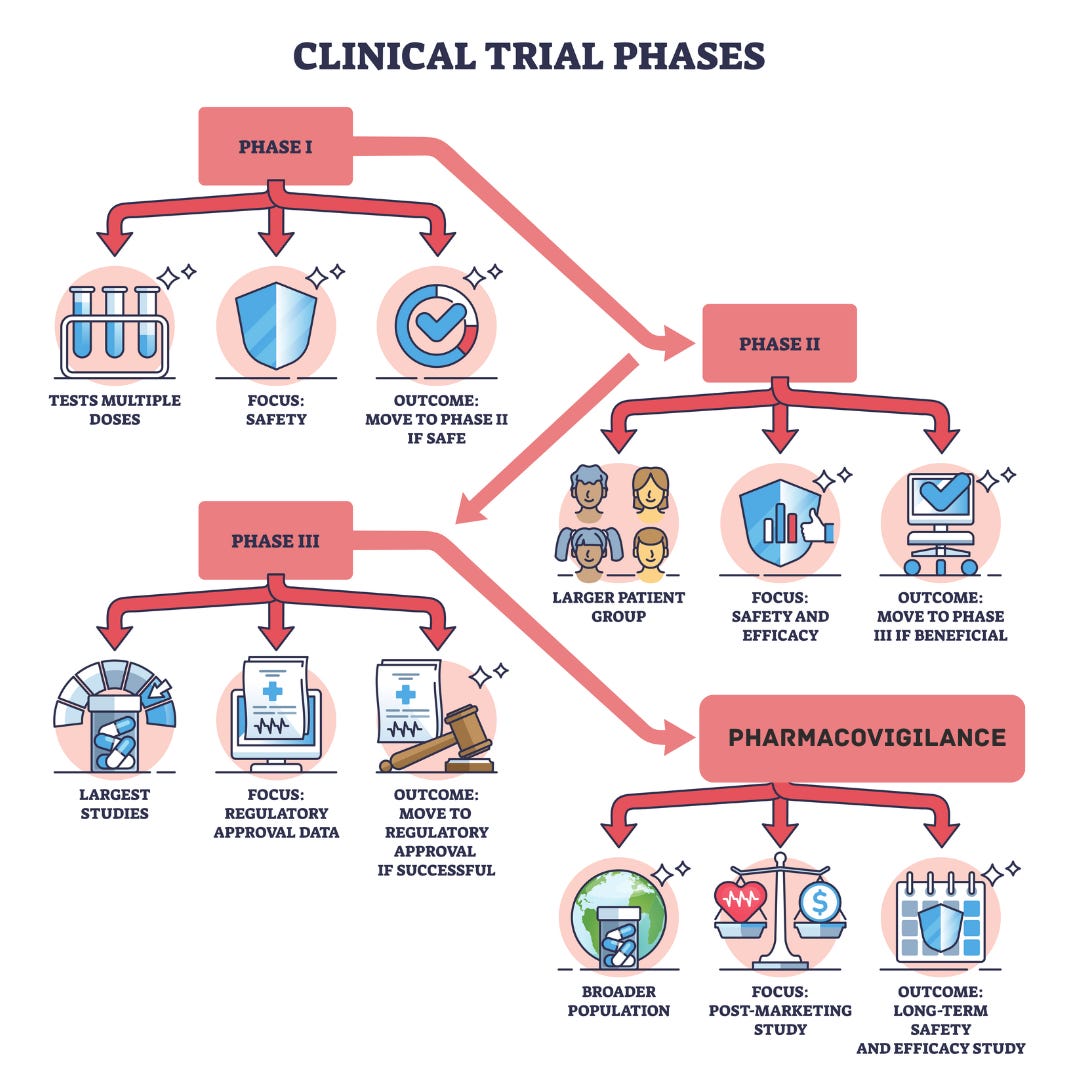

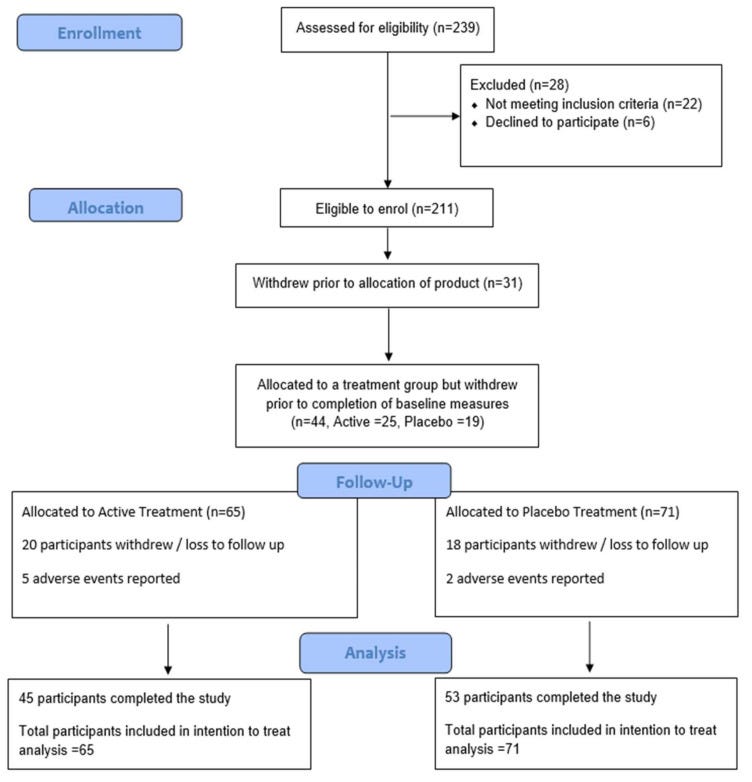

The development and approval of drugs like Byetta, Victoza, Saxenda, and Ozempic involved decades of research and multiple phases of clinical trials with thousands of participants to determine their safety, efficacy, dosing, and long-term effects. The development of GLP-1 receptor agonists involved a rigorous process of chemical modification to create stable, long-acting analogs that could be administered via injection or orally. Each step required demonstrating substantial weight loss and improved glycemic control compared to placebos or other treatments, which supplements like LEMME's have not undergone.

Each phase of development evaluates a different endpoint: safety, tolerability, and pharmacokinetics in preclinical studies and Phase 1; optimal dosage and preliminary efficacy, while monitoring side effects, in Phase 2; and confirmation of efficacy and monitoring of adverse effects over an extended period in much larger populations in Phase 3. Even after approval, extensive safety monitoring continues through post-marketing surveillance (Phase 4). For example, the FDA required a risk evaluation and mitigation strategy (REMS) for Saxenda to monitor for potential risks of medullary thyroid carcinoma and pancreatitis, conditions observed in rodent studies.

The journey from discovery to approval of GLP-1 receptor agonists involved over two decades of research, with extensive preclinical and clinical trials (see footnote for details) and, as you will see, rigorous statistics to analyze the data.

What about LEMME’s GLP-1 Daily Capsules? Let’s discuss.

It is described as "an all-natural supplement with no known side effects, powered by 3 clinically-tested ingredients: Eriomin® Lemon Fruit Extract, Supresa® Saffron Extract, and Morosil™ Red Orange Fruit Extract.*" The company has not conducted ANY clinical trials of the supplement per se, but rather cites small studies of these individual ingredients, neglecting altogether issues of bioavailability (solubility, stability, form, permeability, things we will discuss in part 2) and pharmacokinetics (ADME: absorption, distribution, metabolism, and excretion, that is, how the drug is taken up by the body, how it moves once inside the body and gets to its target site, how the body alters the drug, and how the body gets rid of the drug), among others.

Spoiler: Between small sample sizes, short duration, measuring wrong outcomes (GLP-1 ain’t it) and the weak, clinically irrelevant ones (negligible, perhaps non-existent weight loss or glycemic control) the impact of the intervention (likely none) is blurred into oblivion by widely overlapping uncertainties (confidence intervals). Plus, based on the data, there are strong indications that some of it may be fabricated (details below).

But first, some basic clinical trial statistics (skip ahead to the debunk if you know this already)

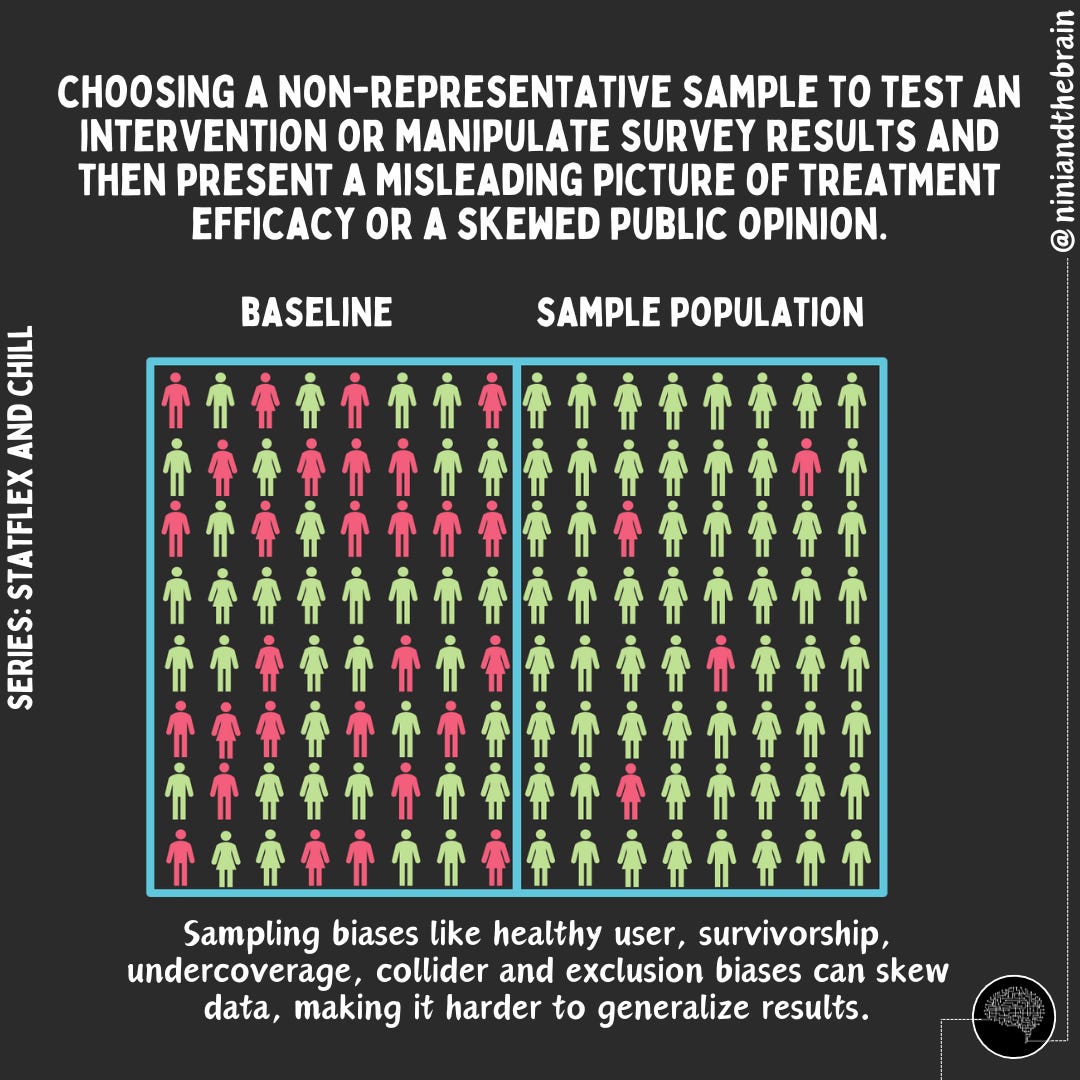

After animal studies in the preclinical stage, a drug clinical trial aims to determine whether an intervention (such as a new drug or treatment) is effective (does it work?) and to what extent (how well does it work). To answer these questions we look at statistical significance and effect size or clinical relevance (read on), respectively. To achieve this, a new intervention is compared against a placebo or an existing treatment in a group of patients who are randomly selected but similar in relevant characteristics. Randomization (the process of assigning participants or subjects in a study to different groups using a random method) ensures that the only significant difference between the groups is the treatment itself, minimizing bias and confounding factors. The sample should avoid any biases that would cause it to differ from the population it is meant to represent. Blinding—where participants and researchers do not know who receives the treatment or placebo—further reduces bias.

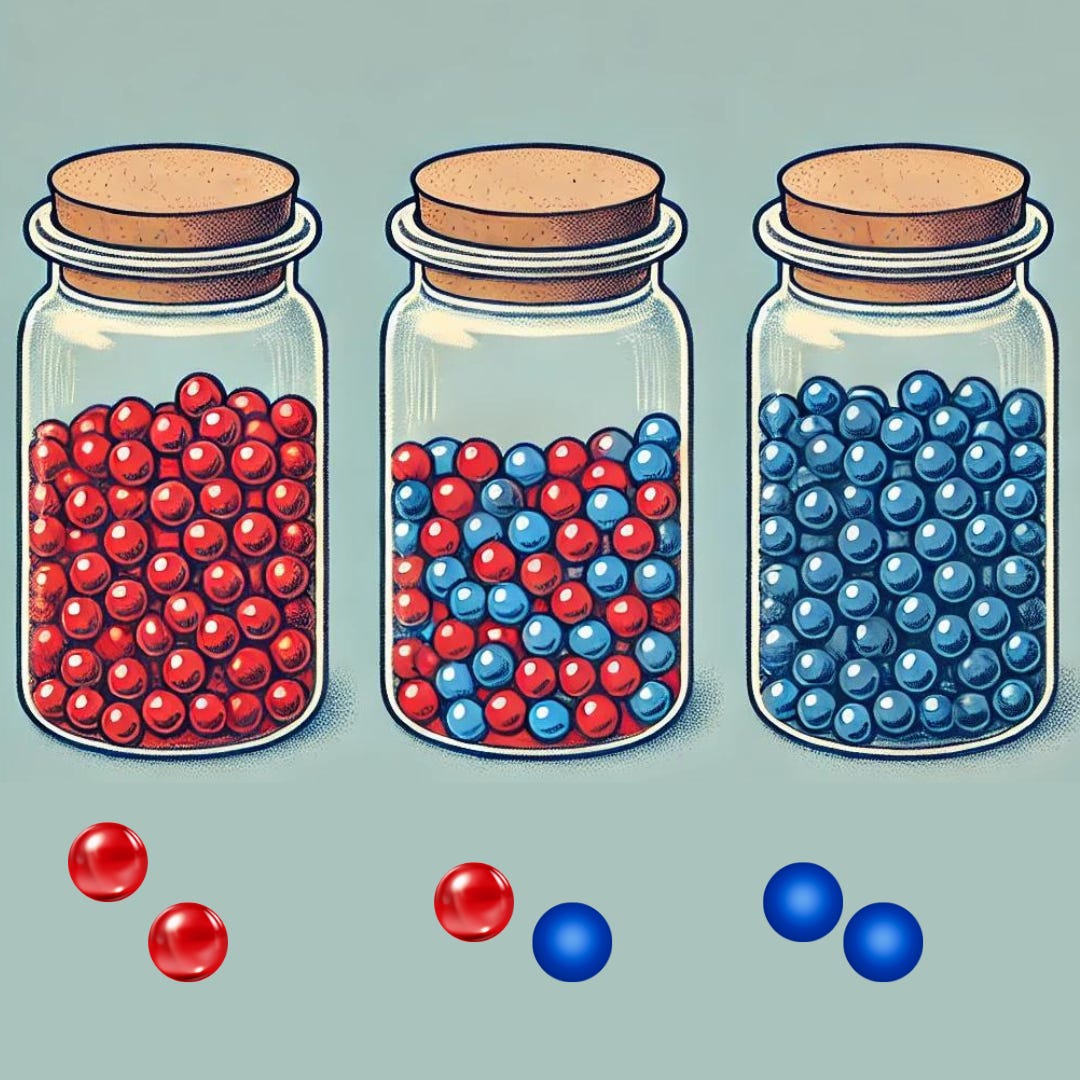

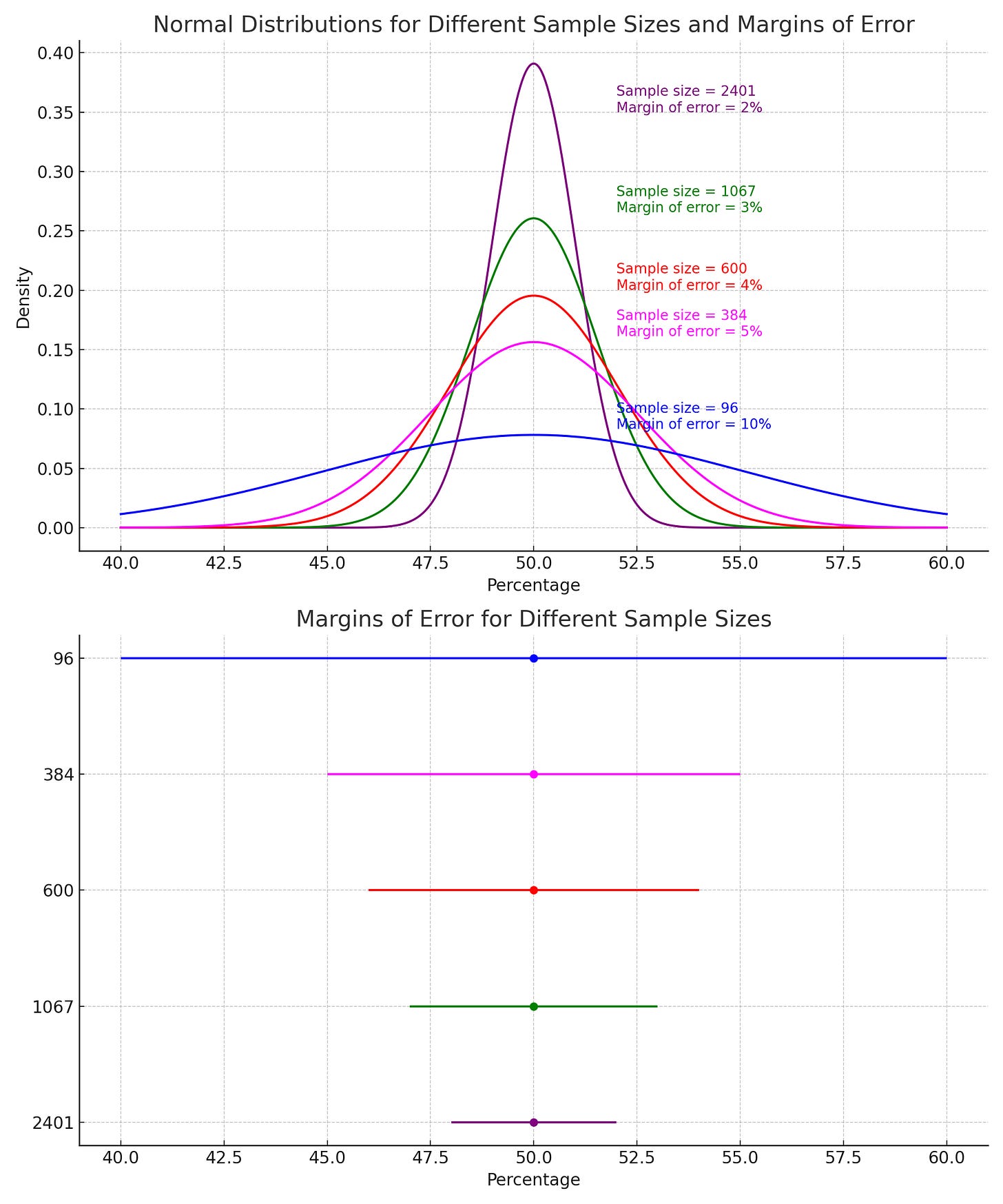

To ensure the results can be generalized to the intended population, a trial must include enough people. Sample size refers to the number of participants in the trial. A larger sample size increases the trial's power, or its ability to detect a true effect if one exists. Small sample sizes may produce unreliable results due to random variation or outliers. To understand, let’s use the 2-marble example.

Sample Size: The 2-Marble Example

Let’s assume we have a jar with 100 marbles, where 20% (20 marbles) are red, and 80% (80 marbles) are another color (e.g., blue). To estimate the proportion of red marbles, you randomly draw only 2 marbles from the jar.

There are three possible outcomes:

Both Marbles are Red: You would assume 100% of the marbles in the jar are red

One Red and One Blue Marble: You’d assume 50% are red (1 out of 2 marbles).

Both Marbles are Blue: You assume there are 0 red marbles.

With a sample size of just 2 marbles, every estimate you could make (0%, 50%, or 100%) is far from the true proportion of 20% red in the jar. This happens because such a small sample introduces a high level of random variation, or sampling error.

Small Sample Size Increases Variability: When you only pick 2 marbles, each individual marble has a large impact on the calculated proportion. As a result, the estimated proportion can swing wildly from the true value due to random chance. But if you increase the number of marble samples, say to 10, now there are way fewer scenarios where you would pick all blue or all red and many more ways of picking 2 red marbles in a selection of 10. In fact, in a jar with 100 marbles, there are over 17 trillion ways to pick 10, and just about a third of them would represent the population mean: 20% red. That’s more representative of the mean than the 2-marble scenario!
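If you want to check the marble numbers yourself, here is a minimal Python sketch. It assumes marbles are drawn without replacement (so the counts follow a hypergeometric distribution); the helper name `prob_red` is just an illustrative label.

```python
from math import comb

JAR_SIZE = 100   # total marbles in the jar
RED = 20         # red marbles (20% of the jar)


def prob_red(k: int, draws: int) -> float:
    """Probability of exactly k red marbles in `draws` draws without replacement."""
    blue = JAR_SIZE - RED
    return comb(RED, k) * comb(blue, draws - k) / comb(JAR_SIZE, draws)


# Two marbles: the only estimates you can ever get are 0%, 50%, or 100% red.
for k in range(3):
    print(f"2 draws, {k} red (estimate {k / 2:.0%}): {prob_red(k, 2):.3f}")

# Ten marbles: count the possible samples and how often exactly 20% are red.
print(f"ways to pick 10 of 100: {comb(JAR_SIZE, 10):,}")
print(f"10 draws, exactly 2 red: {prob_red(2, 10):.3f}")
```

Under these assumptions, a two-marble sample can never land on the true 20%, while a ten-marble sample hits it roughly a third of the time.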

Enter the Lipstick

One thing we care about figuring out during a clinical trial is whether the results are due to chance (are they noise). Imagine you are trying a new lipstick and trying to determine whether it lasts longer than others in the market. One group uses the new lipstick, and the other uses the current product. At the end of the trial, you find that the new lipstick appears to stay on longer by a small amount.

However, before concluding that this new lipstick is better, you need to determine whether the observed effect is real or simply due to random chance. Was the difference in color wear actually caused by the new lipstick, or could it have been due to variability in the participants (e.g., differences in lip shape or moisture, lighting conditions, how the lipstick was applied, or environmental/behavioral factors)?

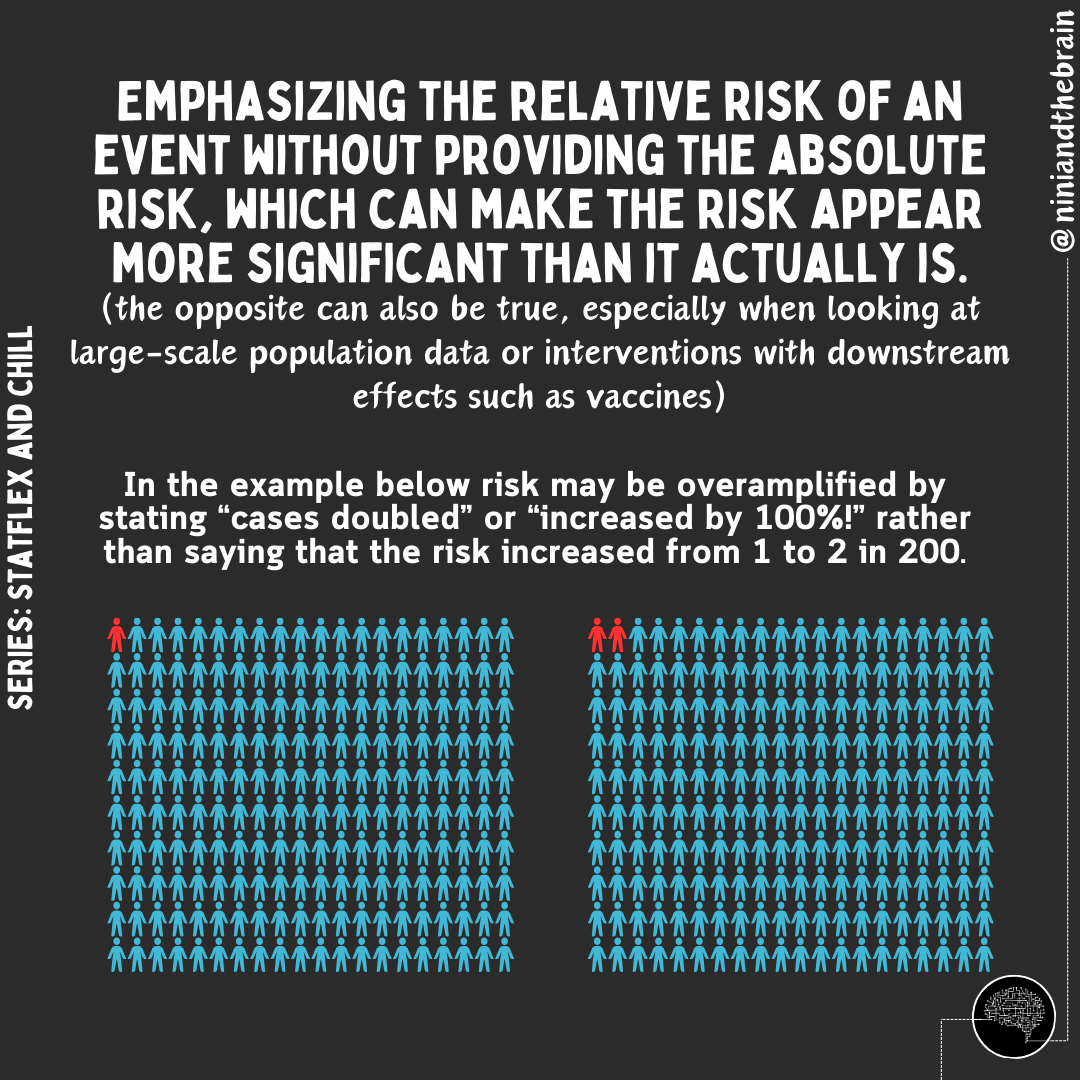

To determine this, you would perform a statistical test to calculate the p-value, the basis for statistical significance. A p-value tells you the probability of observing an effect at least as large as the one you measured purely by chance, assuming there is truly no difference between the lipsticks (see Hypothesis testing). But statistical significance alone isn’t enough. You also want to understand the effect size: how much longer does the new lipstick last?

Would you buy a lipstick that has 50% odds of lasting all day or would you buy one that has a 90% chance of lasting an extra 5 minutes?

These 2 results could technically be statistically significant (there was an effect), but if the effect size (how large of an effect or how well did it work) is tiny, even a statistically significant result might not be meaningful in a practical sense. When we talk about clinical relevance, we are talking about effect size.

Just because a difference is statistically significant doesn't mean it has a meaningful impact on the outcomes we care about. For instance, one study found that adding erlotinib to gemcitabine for advanced pancreatic cancer showed a statistically significant improvement (p = 0.038). However, the actual increase in survival was only about 10 days (6.24 months vs 5.91 months). While the difference exists, it's likely too small to justify the added risks of the drug. Also, how confident would you be in trusting a measurement that claims an extra 5 minutes of lipstick wear? Small effect sizes require both more validation and larger sample sizes due to their uncertainty.

Furthermore, a study needs to measure the right outcomes. The right outcome can be obvious, but not always. Suppose you decide to measure how long the lipstick lasts by counting the number of times a person has to reapply it throughout the day. While this may seem intuitive, it is actually incorrect and misleading as many other factors can influence re-application. It is the wrong outcome measure. A better outcome measure is a visual inspection (or specialized photo to measure intensity) or a more objective measure of how much lipstick is left after a certain time. This, of course, assumes standardized conditions.

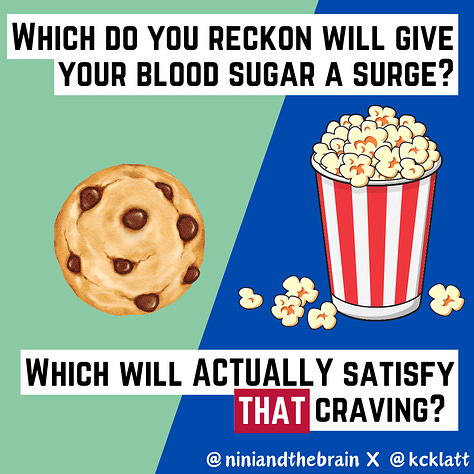

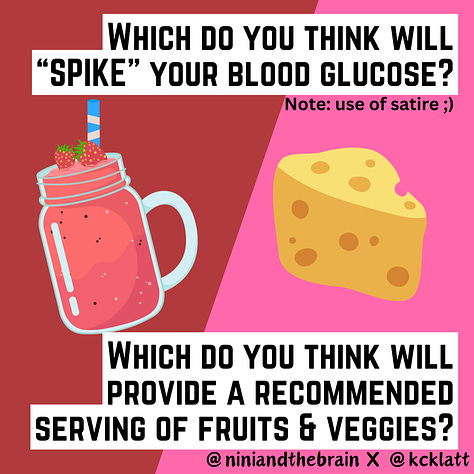

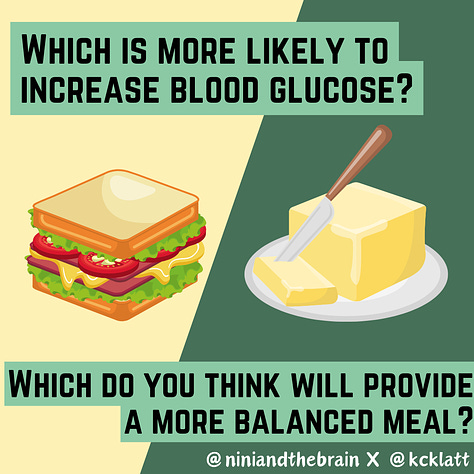

Similarly, one can measure many biomarkers, but that doesn’t imply they tell us much about the true effect of an intervention on our health. This is why continuous blood glucose monitoring is clinically irrelevant for those without diabetes. Blood sugar fluctuations and spikes are normal and are “not a measure of a risk for quality of life or risk of chronic disease” (K. Klatt). Consequently, there is scant evidence for improved health from measuring in this population.

Hypothesis Testing - Did the lipstick really last longer?

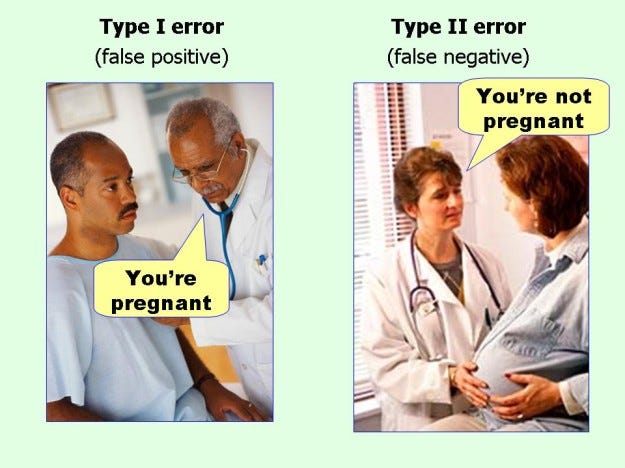

Errors can occur when evaluating the effects of an intervention, even in well-intended study designs. Statistical hypothesis testing relies on probabilities, so no test is ever 100% certain, and two types of errors can occur: Type I and Type II errors.

A Type I error, or false positive, happens when a test incorrectly concludes there is an effect or difference when there isn't one (you conclude that the lipstick lasted longer, when it really didn’t). A Type II error, or false negative, occurs when a test fails to detect a real effect or difference that does exist (the test didn’t detect that the lipstick lasted longer).

Hypothesis testing starts with the assumption that there is no difference or relationship (the null hypothesis). It is paired with an alternative hypothesis, which suggests there is a difference or relationship. For example, if you are testing whether a new lipstick can last longer than another:

The null hypothesis (H0): The new lipstick results in no change in duration.

The alternative hypothesis (H1): The new lipstick lasts longer than the old lipstick.

Type I errors occur due to random chance, and they become more likely when a study deviates from its planned sample size and duration. This risk is controlled by the significance level (alpha, α), usually set at 0.05, which means accepting a 5% chance of a Type I error when there is truly no effect. Equivalently, a test at the 95% confidence level has a 5% chance of producing a Type I error.

Type II errors occur when the test fails to detect an actual effect, often due to insufficient statistical power, which is the ability of the test to detect a true effect when it exists. You can think of statistical power as being kind of like the power of a magnifying glass: the more powerful the lens, the easier it is to spot the difference between two observations. Power is affected by the effect size, measurement error, sample size, and significance level. Higher statistical power (typically 80% or above) reduces the likelihood of Type II errors.
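To see both error types in action, here is a small simulation sketch of the lipstick trial. Everything in it is an assumption made for illustration: wear time is treated as normally distributed with a known standard deviation of 1, both groups are the same size, a simple two-sided z-test is used, and the group sizes (15 and 64) and the true difference (0.5 standard deviations) are made-up numbers.

```python
import random
from statistics import NormalDist, mean

STD_NORMAL = NormalDist()


def p_value(group_a, group_b, sd=1.0):
    """Two-sided z-test p-value for a difference in means (sd assumed known)."""
    n = len(group_a)  # both groups are assumed to be the same size
    z = (mean(group_a) - mean(group_b)) / (sd * (2 / n) ** 0.5)
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def rejection_rate(true_diff, n_per_group, trials=4000, alpha=0.05, seed=1):
    """Fraction of simulated trials that reach p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        old = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        new = [rng.gauss(true_diff, 1.0) for _ in range(n_per_group)]
        if p_value(new, old) < alpha:
            hits += 1
    return hits / trials


# No real difference: every "significant" result is a Type I error.
false_positive_rate = rejection_rate(true_diff=0.0, n_per_group=15)
# A real difference exists: the rejection rate is the power (1 - Type II error rate).
power_small = rejection_rate(true_diff=0.5, n_per_group=15)
power_large = rejection_rate(true_diff=0.5, n_per_group=64)

print(f"false positives with no real effect: {false_positive_rate:.1%}")
print(f"power with 15 per group: {power_small:.1%}")
print(f"power with 64 per group: {power_large:.1%}")
```

In this toy setup the false positive rate should hover near the chosen α, while the small trial misses the real effect most of the time and the larger trial catches it far more often.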

The Interaction of Sample Size, Effect Size, and Uncertainty

If the expected effect size (how well something works) is small, a larger sample size is needed to achieve adequate power to detect it. Conversely, a large effect size may require a smaller sample to achieve sufficient power.

Researchers often perform a power analysis to calculate the required sample size based on the expected effect size, desired statistical power (usually 80%), and significance level (usually 0.05; note that if you do multiple comparisons, you are more likely to get at least one false positive, so, in this case, you have to lower the significance level accordingly). This ensures that the study is designed to have a high probability of detecting an effect if it exists.
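As a rough illustration of a power analysis, the sketch below uses the standard normal-approximation formula for comparing two group means, n per group ≈ 2 × ((z for α/2 + z for power) / d)², where d is the standardized effect size. The function names and the example inputs (effect sizes of 0.5 and 0.2, 25 comparisons) are illustrative assumptions, not values from any study discussed here, and the multiple-comparison calculation assumes the comparisons are independent.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, power=0.80, alpha=0.05):
    """Approximate participants per arm for a two-sided, two-group comparison."""
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / 2)   # critical value for the significance level
    z_power = z(power)           # quantile matching the desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


def familywise_error(alpha, comparisons):
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** comparisons


print("per-group n for a medium effect (d = 0.5):", n_per_group(0.5))
print("per-group n for a small effect (d = 0.2):", n_per_group(0.2))

# Hypothetical: 25 outcomes each tested at alpha = 0.05.
print(f"chance of at least one false positive: {familywise_error(0.05, 25):.1%}")
print(f"Bonferroni-adjusted threshold: {0.05 / 25:.4f}")
```

The takeaway is the direction of the relationship: halving the effect size you hope to detect roughly quadruples the sample you need, and testing many outcomes at 0.05 makes at least one spurious "hit" more likely than not.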

Even when one does measure an effect that is statistically and clinically significant, that measurement will have uncertainty. The size of that uncertainty is a good gauge of how much we can trust the estimate of how well the intervention works. A large part of statistics involves making inferences about the characteristics of an entire population (all possible observations) based on a smaller sample (and measurements) of actual observations.

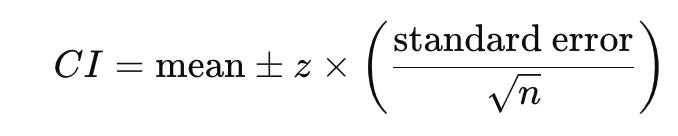

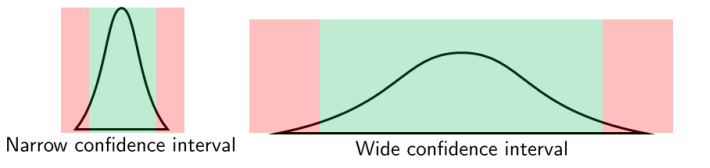

When we measure the effect of an intervention we don’t usually get a single value, but rather a range of values across the different individuals in the study. The best single estimate of the treatment effect based on the trial data (e.g., the average reduction in blood glucose levels) is called the point estimate. A confidence interval (CI) is a range of values that tells you how sure we are about an estimate in statistics, such as the average or effect size in a study. In other words, considering the amount of data we've collected and the variability within the measurements of that sample, how far might the true mean be from the sample mean? Think of it like a "safety net" around a number that shows where the true value is likely to fall. Citing Stanley Chan from Purdue (an EE professor) “…from the data, you tell me what the central estimate or X is. I then ask you, how good is X? Whenever you report an estimate X, you also need to report the confidence interval. Otherwise, your X is meaningless.”

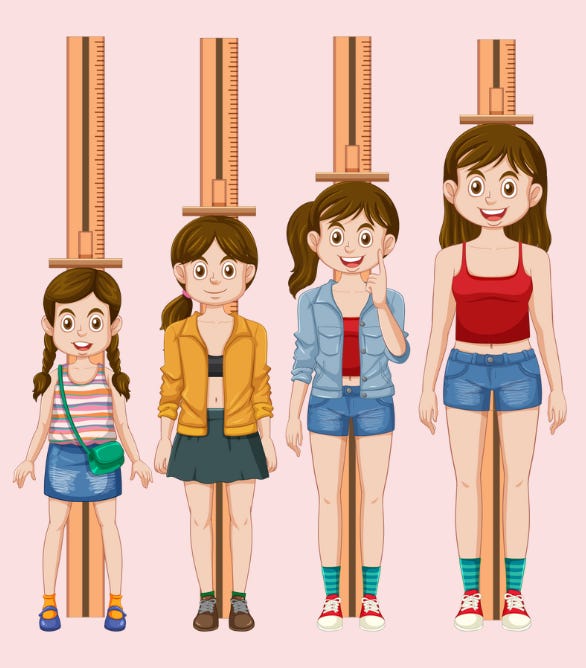

Imagine you're trying to guess the average height of all the people in a city. You can't measure everyone, so you measure a smaller group (a sample) and calculate the average height from that group. But since you didn’t measure everyone, there's a chance your average might not exactly match the true average of the whole city.

A confidence interval helps account for this uncertainty. If your sample’s average height is, say, 5 feet 6 inches, a 95% confidence interval might tell you that the true average height for the whole city is likely between 5 feet 5 inches and 5 feet 7 inches.

The true meaning of a confidence interval relates to the idea of repeating an experiment multiple times. Given a certain confidence level 𝛾, the CI is a random interval that contains the parameter being estimated 𝛾% of the time. If we say we are 95% confident, it means that if we took 100 different samples and calculated their confidence intervals, about 95 of those intervals would contain the true average height of the whole city. For the scenario with the marbles, a 95 percent confidence interval means that if we repeated the experiment 100 times, about 95 of the resulting intervals would contain the true value of 20% red. See again why picking just 2 marbles cannot pin that value down?
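The "repeat the experiment many times" idea can be simulated directly. This is a sketch under made-up assumptions: heights are normally distributed, the true city average is 66 inches (5 feet 6 inches) with a spread of 3 inches, each sample has 50 people, and the interval uses the simple normal approximation (mean ± 1.96 standard errors).

```python
import random
from statistics import mean, stdev

TRUE_MEAN_IN = 66.0   # hypothetical true city average, in inches
TRUE_SD_IN = 3.0      # hypothetical spread of heights, in inches
Z_95 = 1.96           # normal-approximation multiplier for a 95% interval


def interval_from_sample(sample):
    """Approximate 95% confidence interval for the mean of one sample."""
    m = mean(sample)
    half_width = Z_95 * stdev(sample) / len(sample) ** 0.5
    return m - half_width, m + half_width


def coverage(sample_size=50, repeats=2000, seed=7):
    """Fraction of repeated samples whose interval contains the true mean."""
    rng = random.Random(seed)
    covered = 0
    for _ in range(repeats):
        sample = [rng.gauss(TRUE_MEAN_IN, TRUE_SD_IN) for _ in range(sample_size)]
        low, high = interval_from_sample(sample)
        if low <= TRUE_MEAN_IN <= high:
            covered += 1
    return covered / repeats


coverage_rate = coverage()
print(f"intervals containing the true mean: {coverage_rate:.1%}")
```

Each individual interval either contains the true mean or it doesn't; the 95% describes how often the procedure succeeds across many repetitions.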

In our marble example above, we might conclude from picking 2 marbles that the jar is 50% red and 50% blue. When we pick only 2 samples, our estimate of 50 percent has a very wide confidence interval because our sample size is just too small. In other words, when we have fewer numbers, we can be less sure exactly where our mean is, so our confidence interval around it is larger. Our estimate with 10 marbles would have a much narrower confidence interval.
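To put rough numbers on "wider" and "narrower," the sketch below computes a Wilson score interval, one common way to build a confidence interval for a proportion. It treats the draws as independent (a binomial approximation, which ignores that marbles are not put back in the jar), and the three example samples are illustrative.

```python
from math import sqrt


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (approximately 95% when z = 1.96)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# (red marbles seen, marbles drawn)
for red, drawn in [(1, 2), (2, 10), (20, 100)]:
    low, high = wilson_interval(red, drawn)
    print(f"{red}/{drawn} red: roughly {low:.0%} to {high:.0%} (width {high - low:.0%})")
```

With 2 marbles the interval spans most of the range from 0% to 100%, which is another way of saying the sample tells you almost nothing; the interval tightens steadily as the number of marbles grows.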

Confidence intervals help us understand how reliable and accurate an estimate is. When the sample size is large, even small differences or effects between groups can be detected as statistically significant because we capture more of the variation in the population. In contrast, with a small sample size, the study might not have enough power to detect these differences, leading to a false conclusion that there is no effect. Larger samples provide more accurate and stable estimates of the population parameters (like means or proportions). A larger sample size reduces the standard error (a measure of variability in the sample estimate), leading to narrower confidence intervals. With fewer data points, as in our marble example above, the results are more likely to be skewed by outliers or random fluctuations, leading to less reliable conclusions and more uncertainty. Smaller samples generate wider confidence intervals or uncertainty around the estimated effect, making it harder to determine whether the observed effect is statistically significant or due to random variation and evaluate the true strength of the intervention. With more uncertainty in the estimate, this suggests that the study’s results might not accurately represent the true effect and may vary widely if the study is repeated. This undermines the validity of the findings because the true effect could be quite different from what the study estimates.

Whew, ok DONE! Now that we have explained those basic statistical concepts, let’s discuss the studies cited.

TECHing Apart the Claims: A VERY Deep Dive

First claim: Eriomin® Lemon Fruit Extract boosted GLP-1 levels by 17% in 12 weeks:

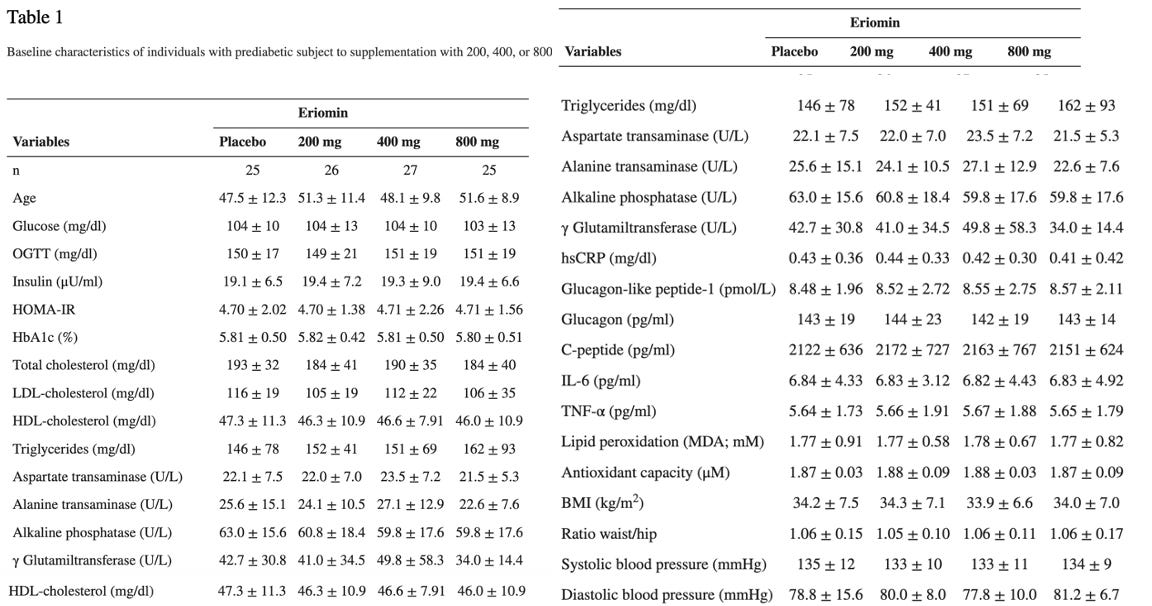

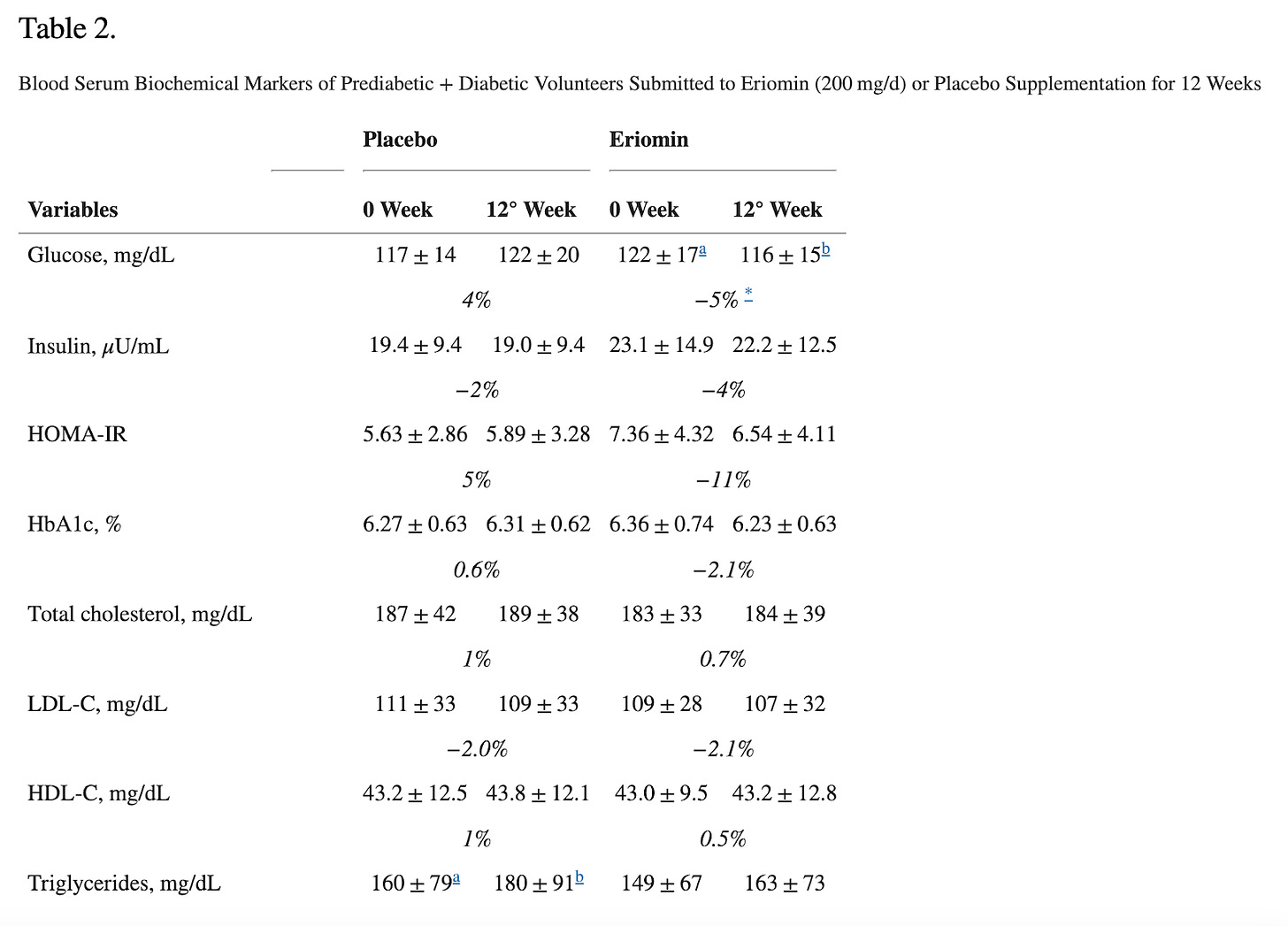

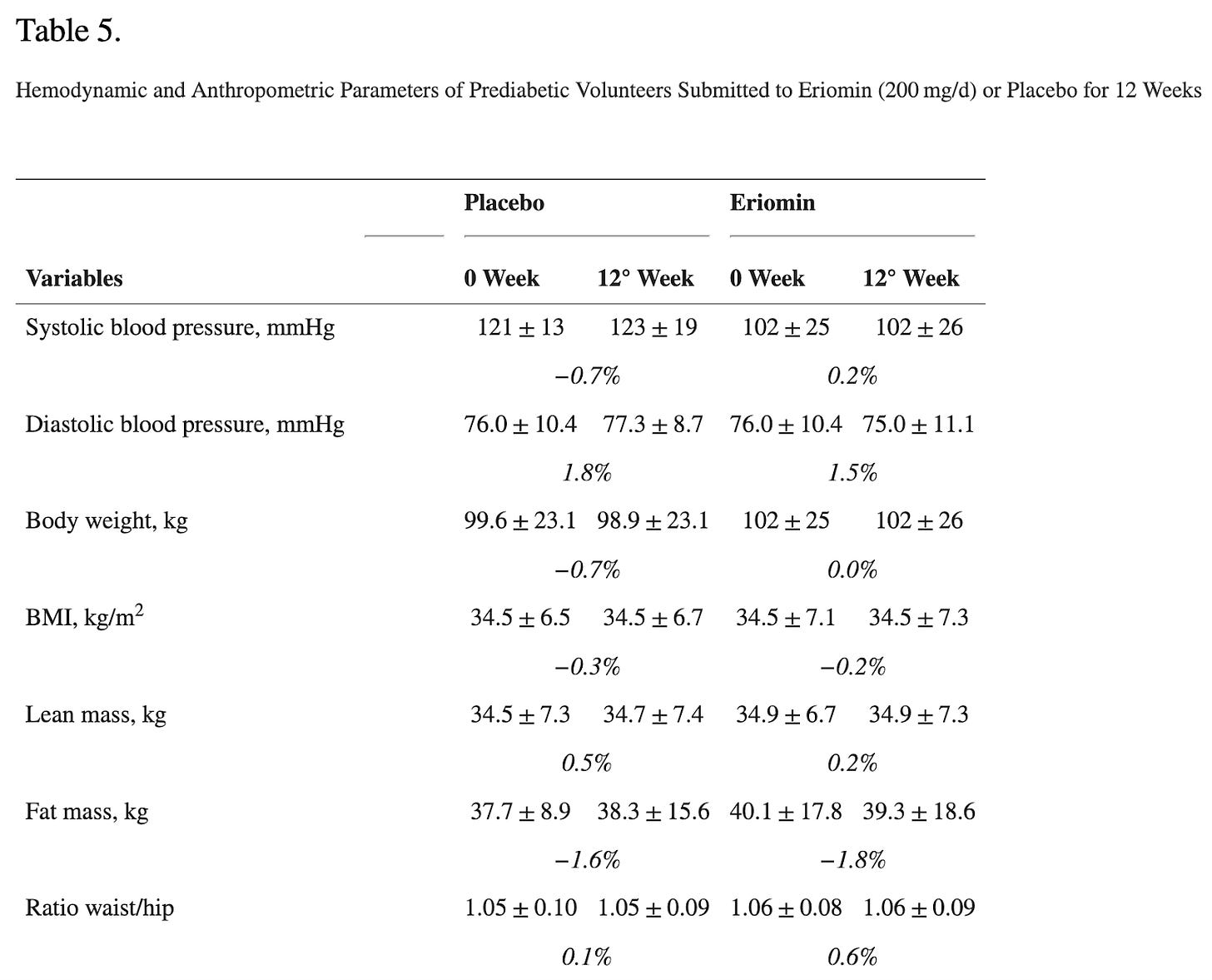

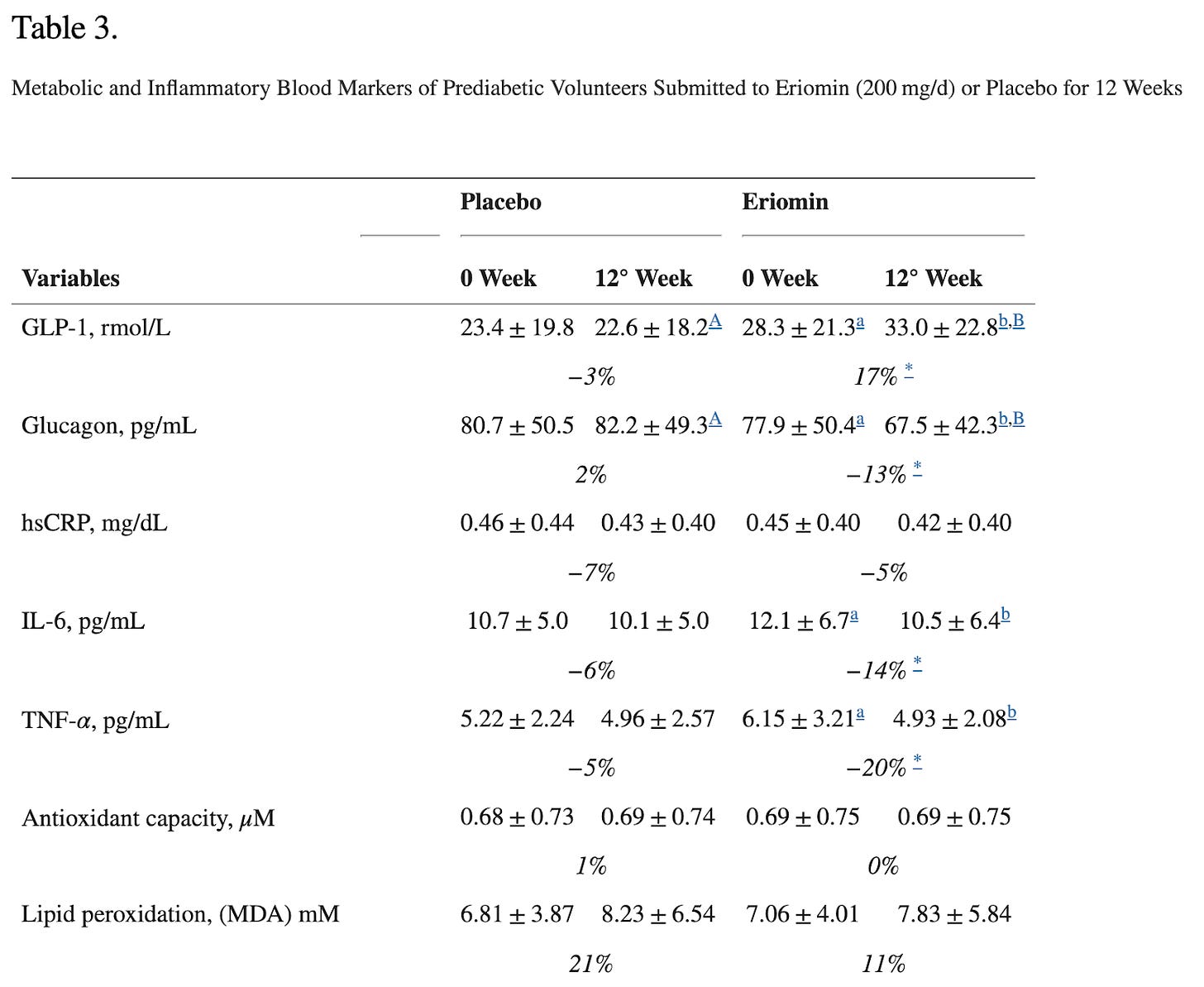

The study they are referring to evaluated the efficacy of Eriomin, a citrus flavonoid, in reducing hyperglycemia and improving diabetes-related biomarkers in 30 individuals with elevated blood glucose. Over 12 weeks with a crossover design, participants received either 200 mg/d of Eriomin or a placebo.

The primary outcome (what the study was designed to measure) was fasting blood serum glucose. The secondary outcomes (other results the study looked at but was not designed around- these can be the basis for additional studies) were insulin, HOMA-IR, HbA1c, GLP-1, Glucagon, total cholesterol, triglycerides, HDL-C, low-density lipoprotein-cholesterol (LDL-C), ALP, AST, ALT, γGT, TNF-α, IL-6, hsCRP, antioxidant capacity and serum lipid peroxidation, body weight, body mass index (BMI), muscle mass, fat mass, and systolic and diastolic blood pressure.

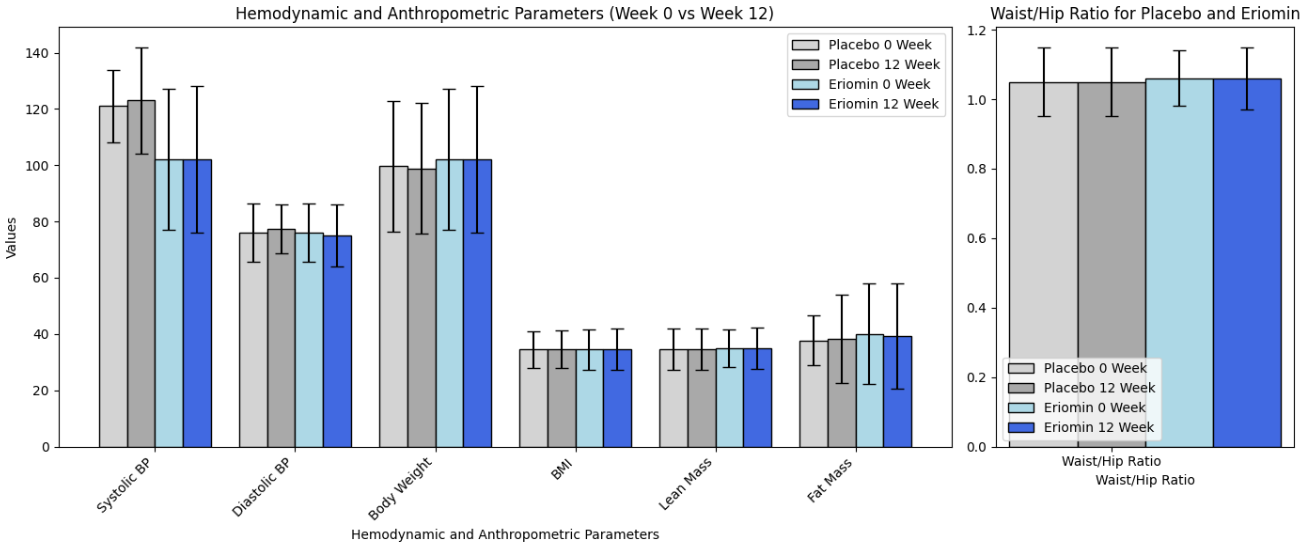

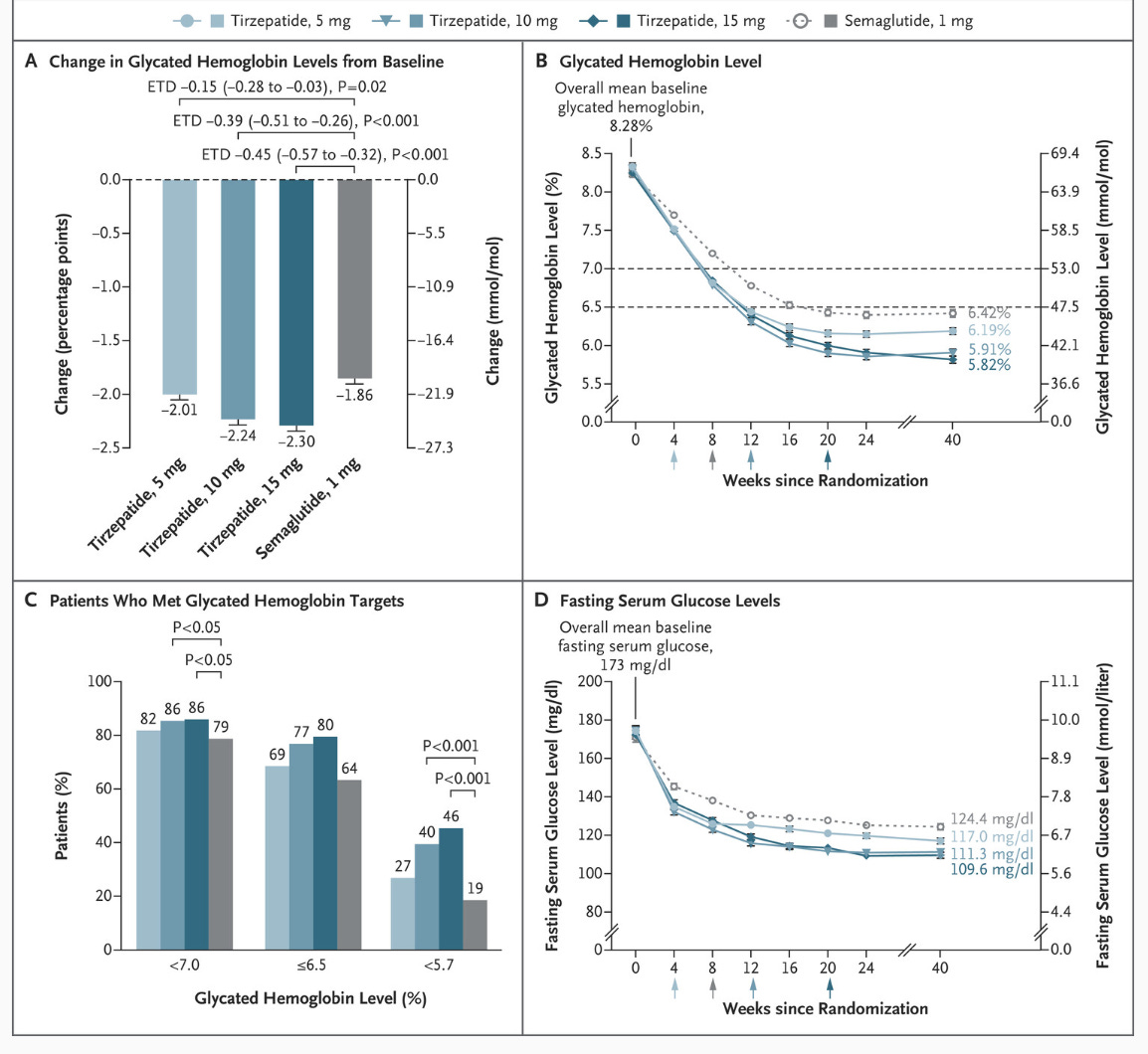

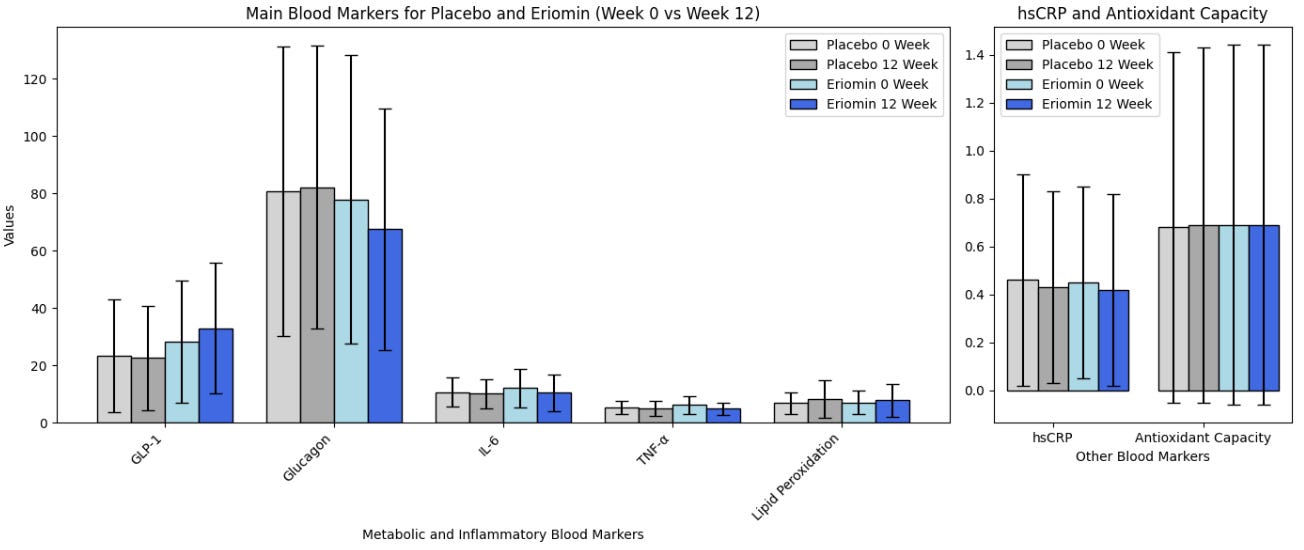

According to the authors, Eriomin led to a 5% reduction in blood glucose, improved insulin resistance, increased GLP-1, and reduced inflammation (IL-6, TNF-α). The plots below summarize the results (tables with numerical values are found in the footnotes).

Let’s get right to the heart of it: This did NOT lead to weight loss or differences in waist/hip ratio! That’s because, as we have written before, GLP-1 is quickly broken down in the body, and for that reason GLP-1 drugs aren’t one’s intrinsic GLP-1 but rather GLP-1 agonists that stimulate the receptors and mimic the effects of our flimsy GLP-1. In all, the outcomes measured (blood glucose, etc.) are not analogous to weight loss.

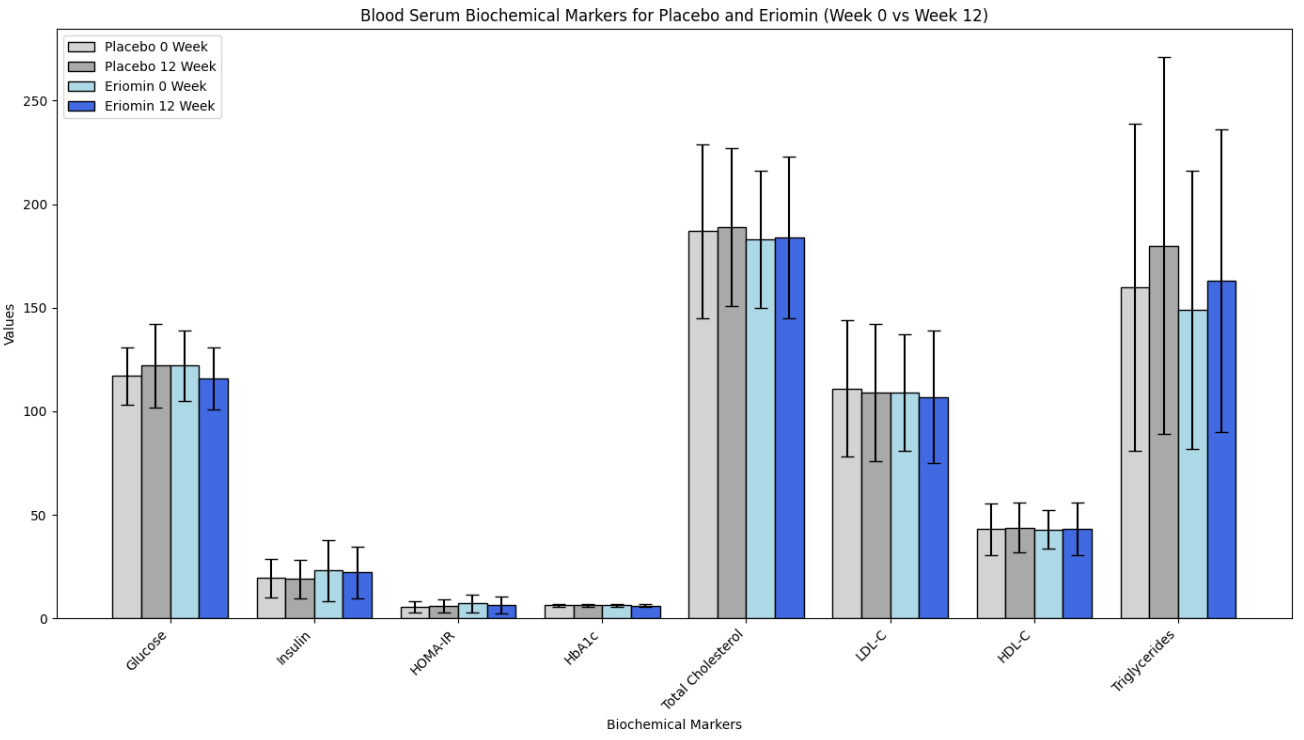

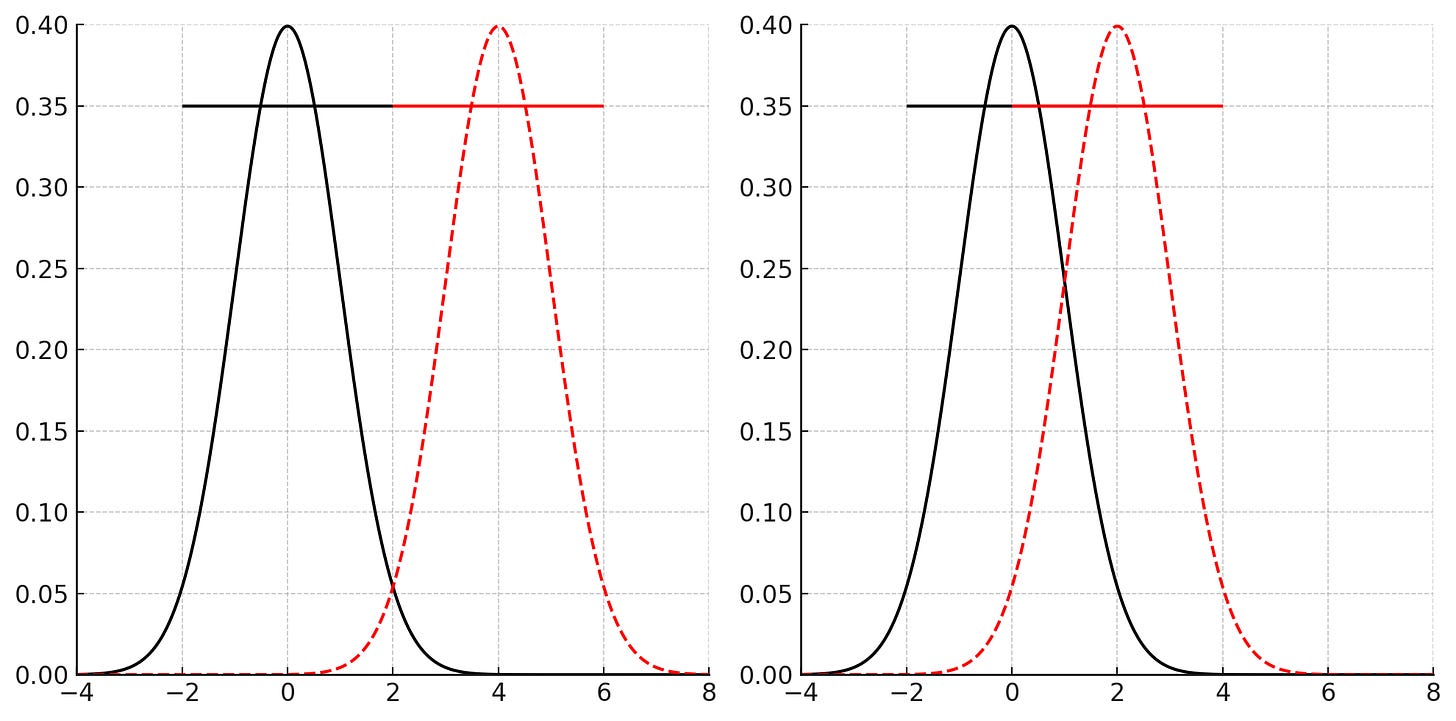

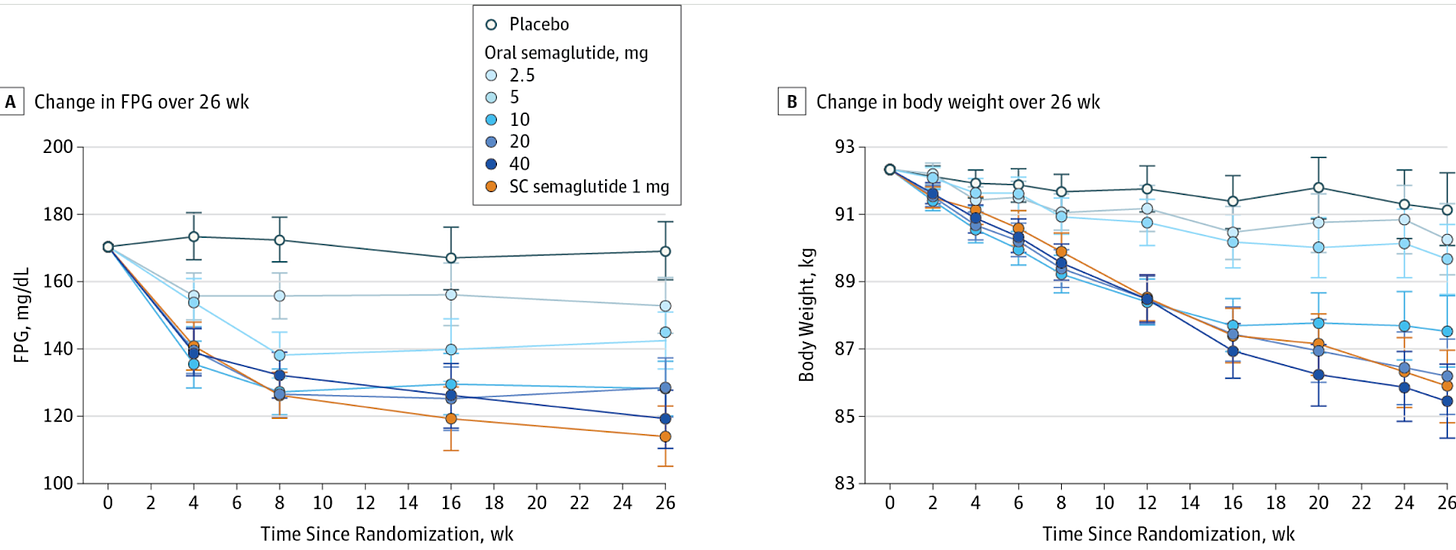

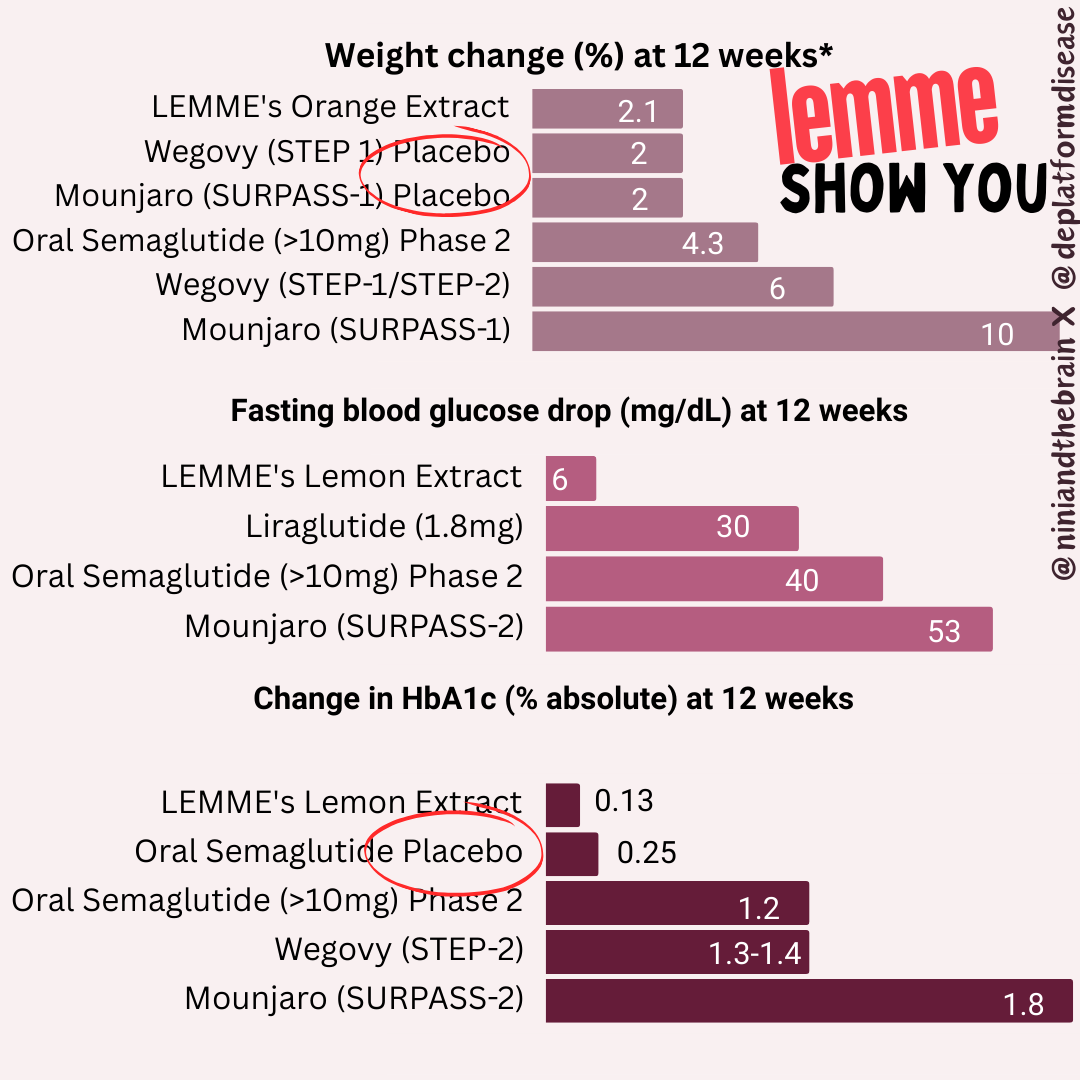

Second, typically FDA-approved drugs significantly lower fasting blood glucose levels. For instance, semaglutide and liraglutide can decrease fasting glucose by around 20-40 mg/dL (1.1-2.2 mmol/L), depending on the dose and patient profile. Mounjaro has been found to lower blood glucose by roughly 50 mg/dL by week 12 (see graphs below) in the SURPASS-2 trial! The values provided here for the intervention are 6 mg/dL, with a confidence interval of 15! What does that mean?

The small effect size (6 mg/dL), combined with a confidence interval (±15 mg/dL) that is wide relative to the change and overlaps between groups, along with the small sample size, all suggest that there is a lot of uncertainty about the true effect of the intervention. Since the confidence interval includes both a rather large positive and negative range around the estimate (example for glucose: 116 ± 15), it also implies that the intervention could potentially result in a substantial increase in glucose levels rather than a decrease. Put another way, the effect of Eriomin measured in this study is consistent with anything from a ~20 mg/dL decrease in blood glucose level to an increase of ~10 mg/dL; we have no way of knowing from these data whether it will increase or decrease blood glucose. Remember, effect size is a measure of how well an intervention works. This doesn’t work well enough to make the results reliable (can they be reproduced?), especially amongst a few participants. It is like our two-marble problem.
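The arithmetic behind that range is simple enough to spell out. This sketch takes the figures quoted above at face value and treats the ±15 mg/dL as the half-width of the interval around the 6 mg/dL reduction, which is an assumption about how to read the reported numbers.

```python
point_estimate = -6.0   # mg/dL change in fasting glucose, as quoted above
margin = 15.0           # mg/dL, the ± value quoted above (assumed interval half-width)

lower = point_estimate - margin   # largest plausible decrease
upper = point_estimate + margin   # largest plausible increase
includes_zero = lower <= 0.0 <= upper

print(f"plausible change: {lower:+.0f} to {upper:+.0f} mg/dL")
print("interval includes no change at all:", includes_zero)
```

An interval that straddles zero cannot tell you the direction of the effect, let alone its size.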

Too Good to be True?

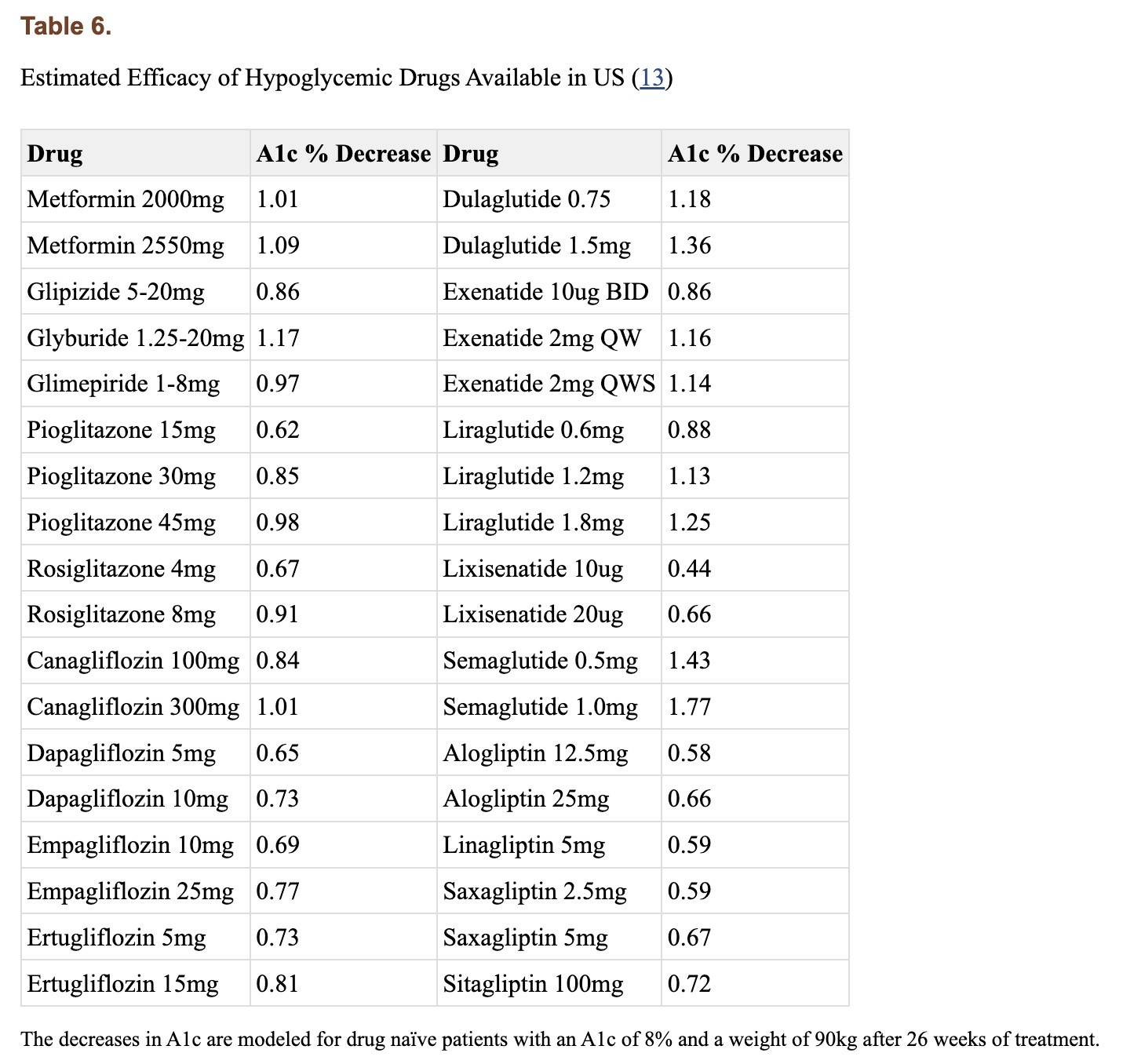

Interestingly, the authors claim Eriomin lowers HbA1c levels by a whopping 2.1%, which is a massive change. Let’s break this down. Blood sugar can be measured directly, but that only gives you a snapshot influenced by factors like when you last ate or took insulin. To get a longer-term view, we use hemoglobin A1c (HbA1c), which reflects average blood sugar over the past few months. Glucose in the body can undergo an irreversible reaction with the hemoglobin in our red blood cells (called glycation), forming HbA1c, so higher blood sugar levels lead to higher HbA1c. The measure is not perfect, and many factors can skew it up or down (for example, conditions that shorten the lifespan of red blood cells can lower it), but it’s helpful to get a sense of long-term blood glucose levels. For context, a healthy HbA1c is normally considered to be below 5.7% (with some slight variation between labs), values above 6.4% are consistent with diabetes, and a value of 7.0% is associated with a substantially higher risk of vascular complications. For a drug to lower it by 2.1% is on par with and above some of the most effective diabetes interventions out there. Though they have small sample sizes, multiple trials for bariatric surgery have shown it to reduce HbA1c by 2-3% or more in patients with BMI 25-53. For reference, below are the effect sizes of a number of diabetes medications.

For an herb (or even a drug) to produce an effect of this magnitude over this short period of time is very suspicious. It raises concern that, rather than regulating blood glucose, the extract is interfering with the assay that measures hemoglobin A1c, that there is a measurement error, or maybe even that it is shortening the lifespan of red blood cells. But on closer inspection, the authors report a change relative to the baseline value, not an absolute change as is typical, which is why they describe it as nonsignificant. In other words, there was no meaningful change in hemoglobin A1c.

Another red flag is that the authors report hemoglobin A1c values to two decimal places, while the commercial test they used appears to only provide results to a single decimal place. Other studies using this test report results only to a single decimal place. This casts further doubt on the validity of the measurements reported.

When reporting absolute risk or change, we provide the actual likelihood or probability of an event occurring within a specific group, expressed as a percentage, probability, or number. For example, if 2 out of 100 people develop a disease, the absolute risk is 2%. For HbA1c, absolute changes are used because the metric itself is given as a percentage. Relative risk, on the other hand, compares two groups (typically exposed versus non-exposed), showing how much more or less likely an event is in one group. In this case, the authors reported a 2.1% relative change in HbA1c (from 6.36 ± 0.74 to 6.23 ± 0.63), but it should have been reported as an absolute change of 0.13 percentage points. For comparison, the placebo group in oral semaglutide trials saw a roughly 0.2% drop in HbA1c over 12 weeks.

For reference, here is how the change in HbA1c is reported in patients with type 2 diabetes taking oral semaglutide, compared with placebo and subcutaneous semaglutide groups.
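Here is the difference between the two ways of reporting, worked out from the mean HbA1c values quoted above. The variable names are illustrative; only the two means come from the study as cited.

```python
baseline_hba1c = 6.36   # mean HbA1c (%) before the intervention, as quoted above
final_hba1c = 6.23      # mean HbA1c (%) after the intervention, as quoted above

# Absolute change: a plain subtraction, in percentage points of HbA1c.
absolute_change = baseline_hba1c - final_hba1c

# Relative change: the same difference expressed as a share of the baseline.
relative_change = absolute_change / baseline_hba1c * 100

print(f"absolute change: {absolute_change:.2f} percentage points")
print(f"relative change: about {relative_change:.1f}% of baseline")
```

The same tiny shift looks an order of magnitude bigger when it is quoted as a relative change, which is why the convention for HbA1c is to report the absolute difference.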

Strength of the intervention: When evaluating a pharmaceutical intervention, early studies focus on determining the optimal dose by testing various doses to find the best balance between effectiveness and side effects. A key issue with this study (and the other 2) is the lack of investigation into the strength of the intervention. Typically, a dose-response effect should be evaluated to confirm the cause-effect relationship, as seen in FDA-approved drugs for weight loss and glycemic control, but this wasn't done here.

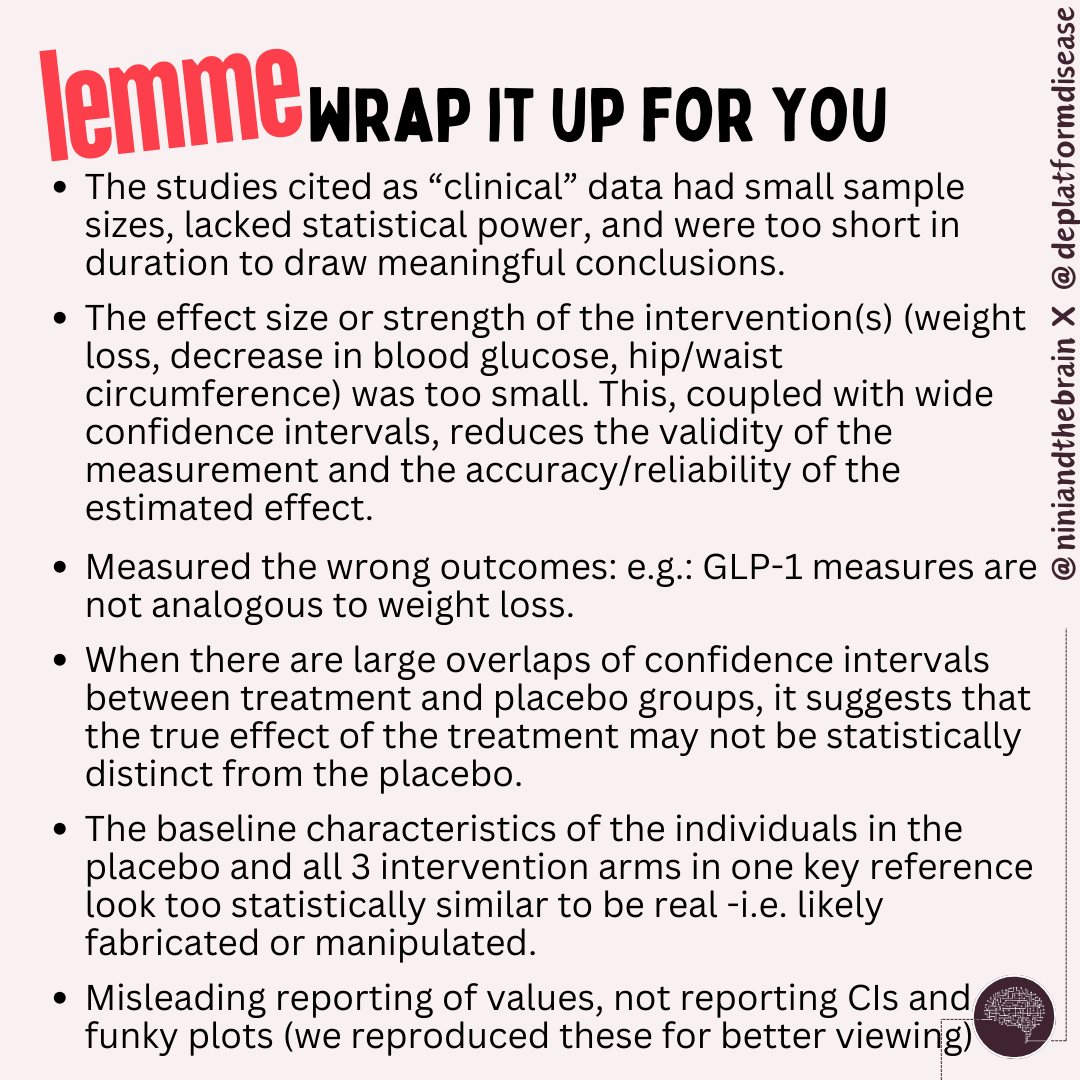

Why the Study is Insufficient to Make Claims: A Summary

Small sample size: With only 30 participants completing the study, the statistical power is limited, raising concerns about the generalizability of the findings. The authors calculated the sample size based on the results of a prior paper. Interestingly, but not surprisingly, the baseline characteristics of the placebo arm and all 3 intervention arms in that paper look statistically similar at first glance (too similar), which calls the validity and reliability of the data here into question too. No two randomized populations would be expected to show such similar characteristics across all measured traits, including BMI and waist/hip ratio, and this is extremely suspicious for data fabrication. What are the odds that 4 randomized groups are so similar across this many variables (hint: low, VERY low)? Second, that paper suffers from many of the same shortcomings as this one, so using its effect size to calculate the sample size needed for this one is an unreliable assumption, at best.

Placebo effect and crossover design: A crossover study design introduces the possibility of carryover effects, even with a washout period, which could bias results. The study’s short duration also increases the odds of this happening.

Modest reductions in key markers: The reduction in fasting blood glucose (6 mg/dL) was small, and not even clearly a true reduction based on the confidence intervals.

Misleading statements: The reduction of HbA1c was reported as a relative change, which is inconsistent with how it is reported in the literature.

Wrong outcome measures: GLP-1 measures are not analogous with weight loss.

When confidence intervals (CIs) overlap between treatment and placebo groups, it suggests that the true effect of the treatment may not be statistically distinct from the placebo. A small study also has little ability to detect a true difference (a higher chance of a Type II error), and the small central estimate (−6 mg/dL) of the change in fasting blood glucose is consistent with an intervention that has a weak or inconsistent effect on glucose levels. This weakens the evidence for the claim that Eriomin produced meaningful improvements in glucose control or inflammation.

For reference, below is the change in weight and fasting blood glucose as reported in patients with type 2 diabetes taking oral semaglutide, compared with placebo and subcutaneous semaglutide groups. Notice how the CIs don’t overlap much (or at all) and overlap less and less relative to placebo as the strength of the intervention increases. Low overlap corresponds to a larger effect size, which increases the test's ability to detect differences even with smaller sample sizes (i.e., increased statistical power).

Insufficient duration: This one is important. Just as important as weight loss is the duration of the sustained effect.
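The link between effect size, sample size, and statistical power mentioned in this summary can be sketched with a normal approximation. This is only an illustration under stated assumptions: it treats the comparison as a simple two-group design (the actual study was a crossover), the 20 mg/dL standard deviation is a hypothetical placeholder rather than a value from the paper, and `approx_power` is a name made up here.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(delta: float, sd: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test with equal group sizes."""
    z = NormalDist()
    se = sd * sqrt(2.0 / n_per_group)        # standard error of the difference in means
    z_crit = z.inv_cdf(1.0 - alpha / 2.0)    # about 1.96 for alpha = 0.05
    shift = abs(delta) / se                  # true effect measured in standard errors
    # Probability that the test statistic lands in either rejection region.
    return (1.0 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


ASSUMED_SD = 20.0  # hypothetical spread of fasting glucose, mg/dL (not from the paper)

small_effect = approx_power(delta=6.0, sd=ASSUMED_SD, n_per_group=30)
large_effect = approx_power(delta=20.0, sd=ASSUMED_SD, n_per_group=30)
print(f"power for a 6 mg/dL difference:  {small_effect:.2f}")
print(f"power for a 20 mg/dL difference: {large_effect:.2f}")
```

Under these assumptions, a difference as small as 6 mg/dL would be missed most of the time with 30 people per group, while a much larger effect would usually be caught. That is the sense in which big, clearly separated effects need fewer participants.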

Second claim: Supresa® Saffron Extract showed 69% decreased hunger and 65% reduced sugar cravings in 8 weeks.

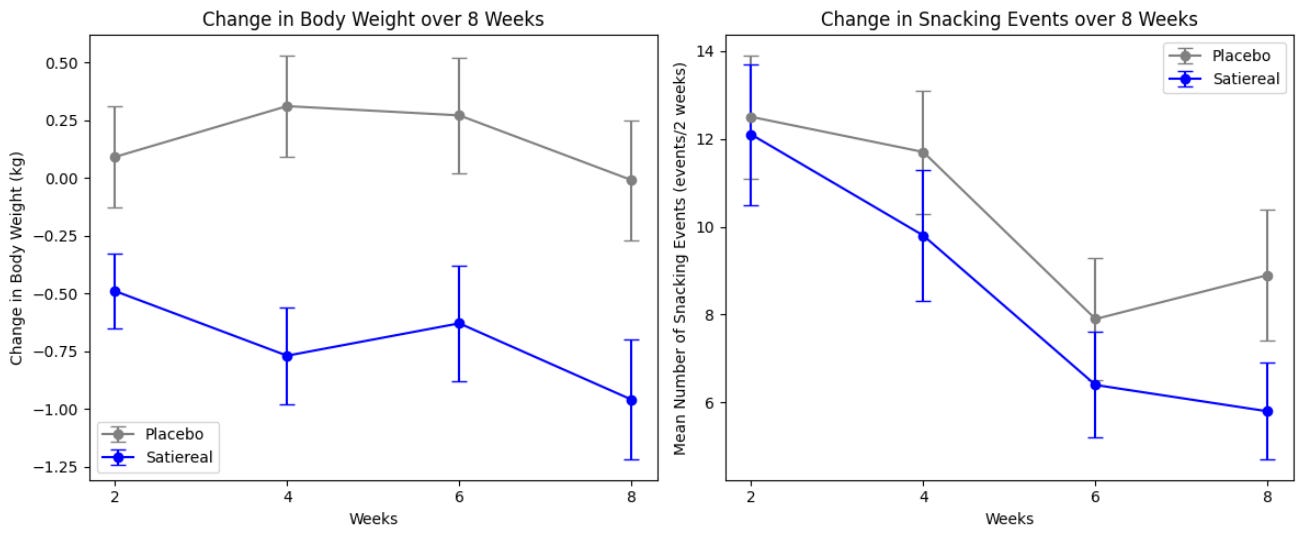

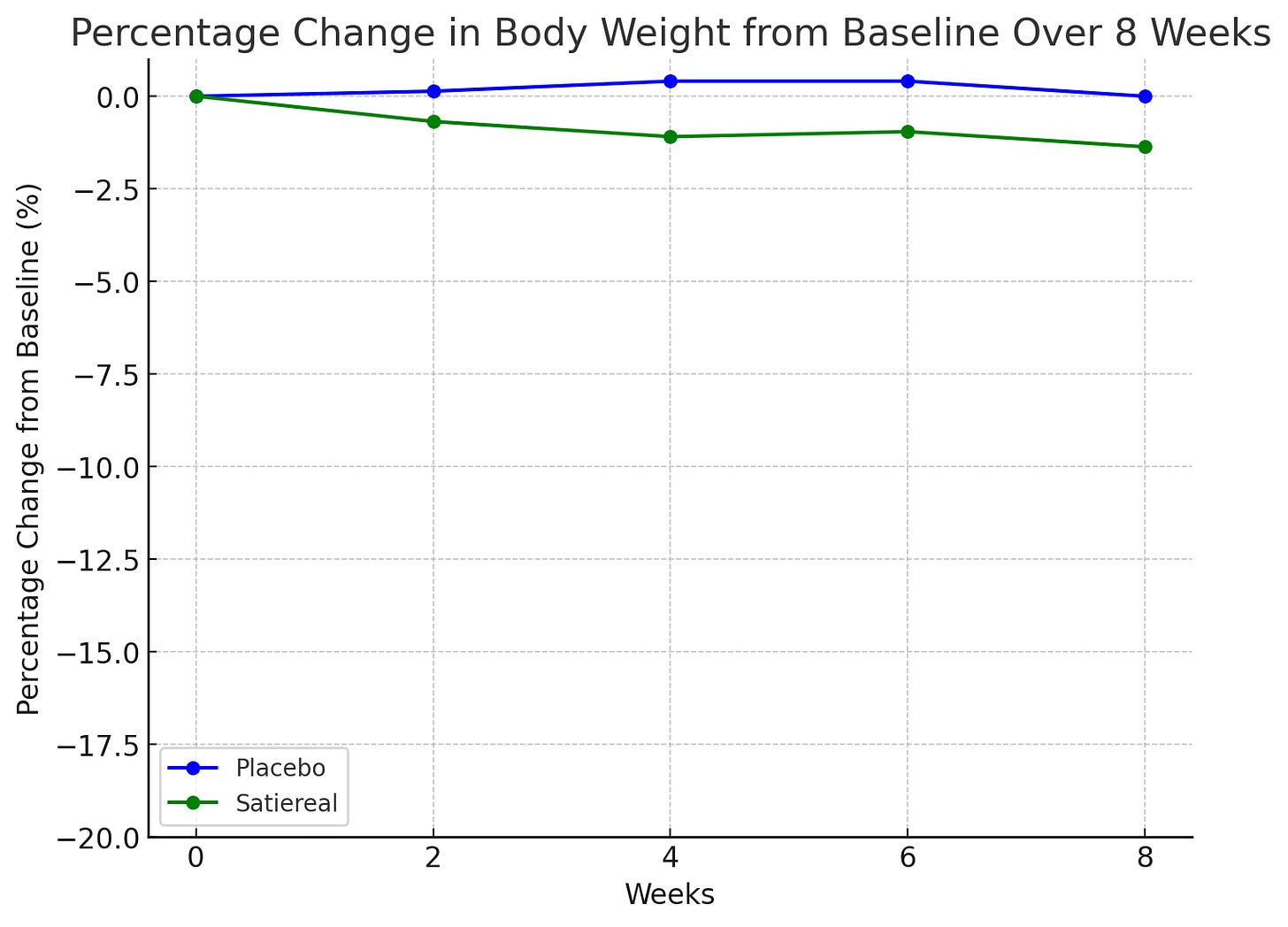

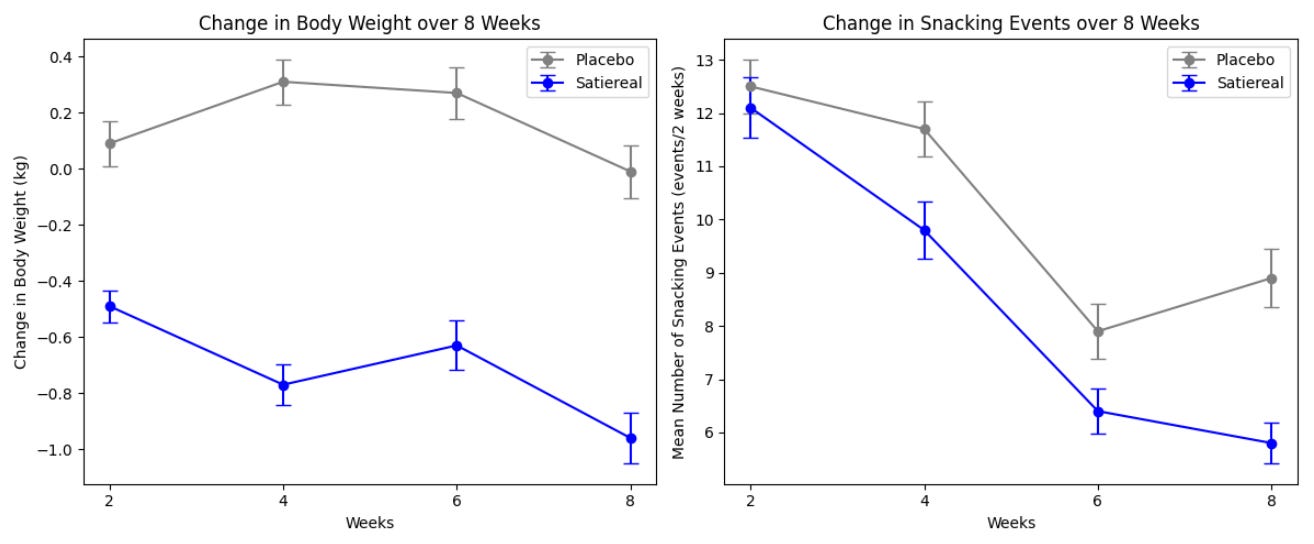

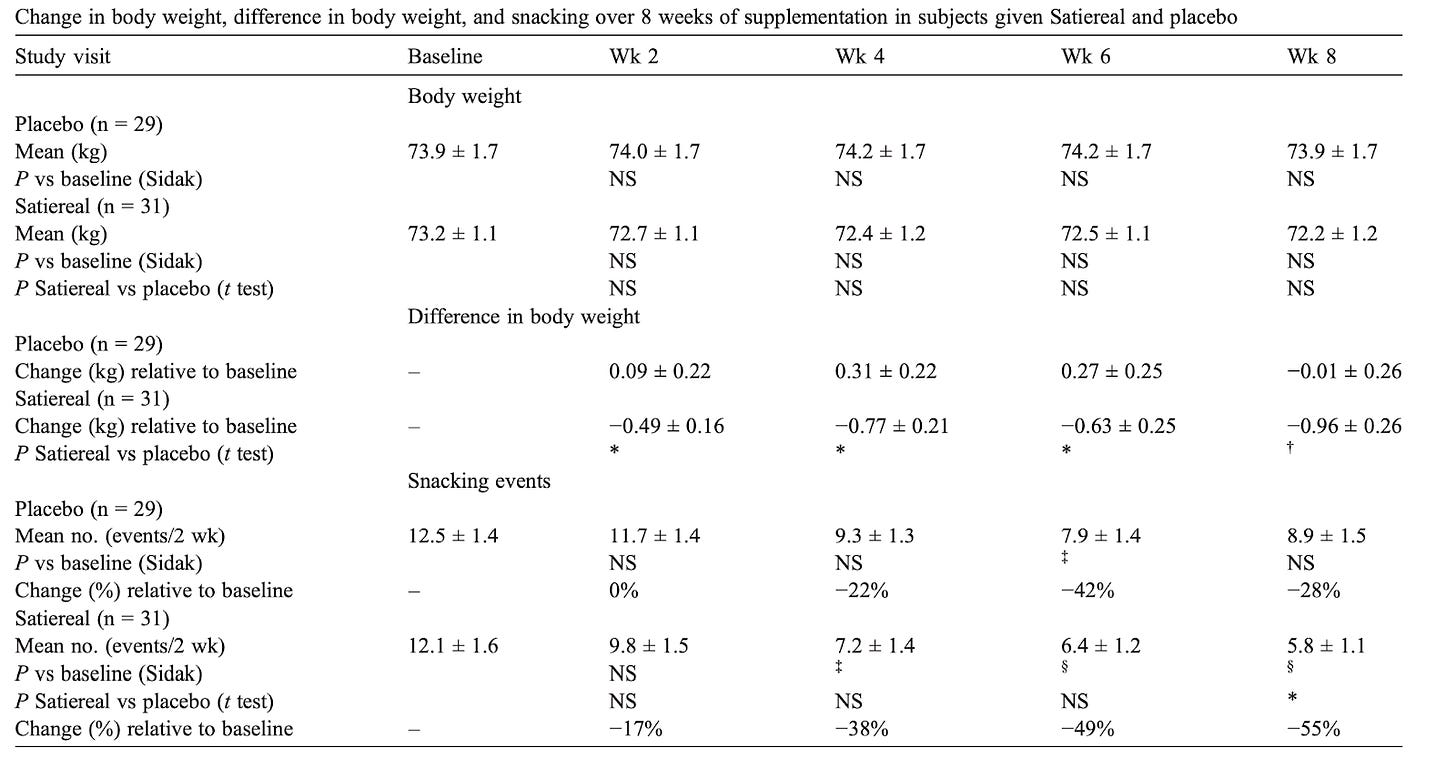

This study investigated the effects of Satiereal, a novel saffron stigma extract, on snacking behavior and weight loss in healthy, mildly overweight women (BMI 25-28). The randomized, double-blind, placebo-controlled trial involved 60 participants who took either Satiereal (176.5 mg/day) or a placebo for 8 weeks, without restrictions on caloric intake. The study found that Satiereal significantly reduced body weight and snacking frequency compared to the placebo (P < .01 for weight loss and P < .05 for snacking frequency). Or did it?

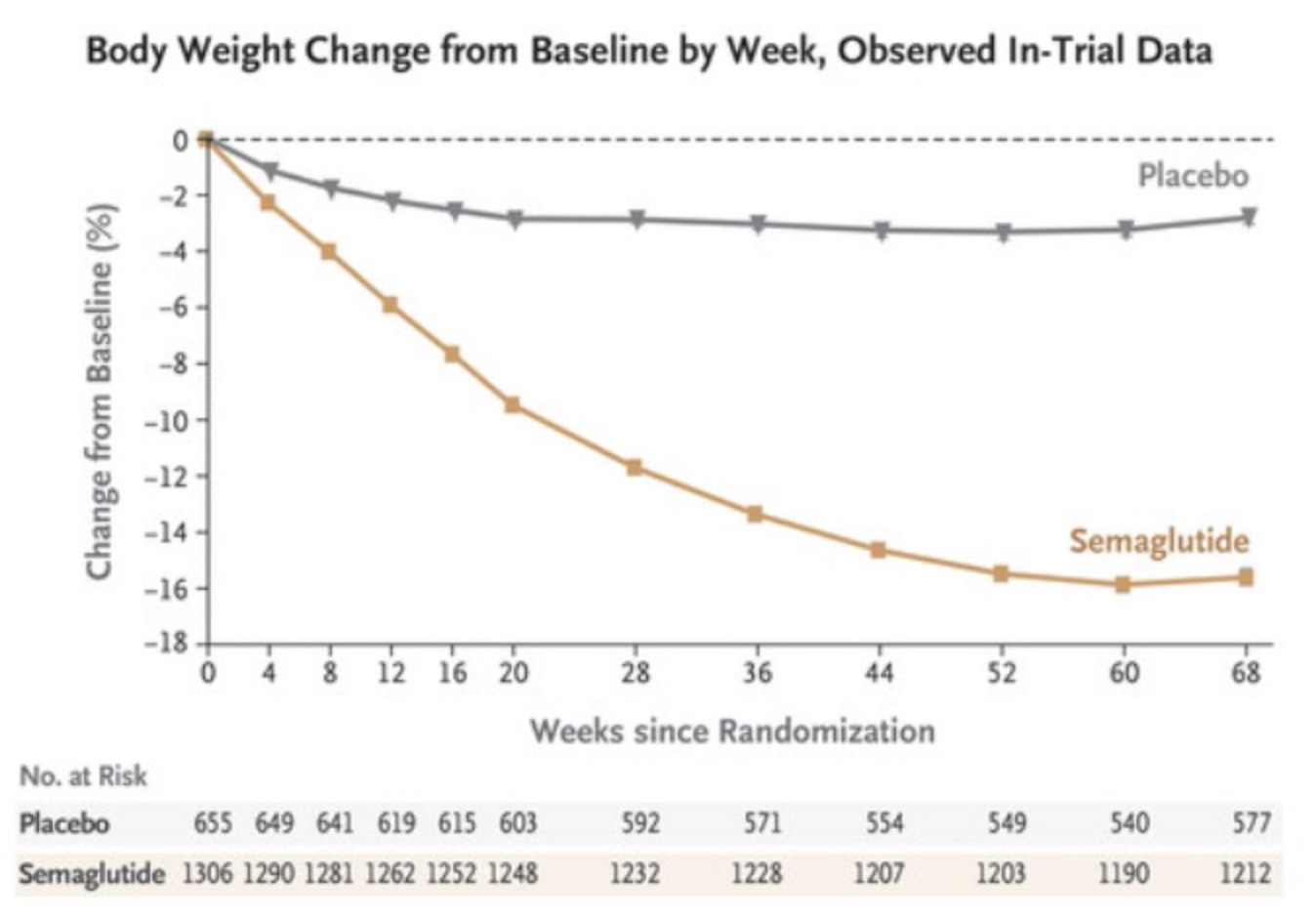

Satiereal supplementation resulted in a modest but statistically significant reduction in body weight after 8 weeks (−0.96 ± 0.26 kg), whereas no effect was seen in the placebo group (−0.01 ± 1.46 kg). The figure above illustrates the gradual decrease in body weight relative to baseline observed during supplementation. For reference, in the STEP 1 study, the placebo group had lost about 2% of their body weight by 8 weeks using the lifestyle intervention only; using the 73.2 kg average mass of the treatment group in the saffron extract study as a baseline, this corresponds to a weight loss of ~1.46 kg. At that point, the semaglutide group had lost ~4% of their body weight, here equivalent to ~2.93 kg (with the gap widening as the study went on). Importantly, the change in fat mass in this study was the same for both arms by the end of the study. Admittedly, 8 weeks is not long enough to see substantial changes in body weight, but this speaks to the poor quality of the data on which LEMME is based. The result may have been statistically significant (we can measure it with the math), but it was very likely clinically insignificant or irrelevant.
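The unit conversions behind that comparison are simple enough to check directly. The sketch below uses only the numbers quoted above (73.2 kg baseline, 0.96 kg lost on Satiereal, roughly 2% and 4% lost in the STEP 1 placebo and semaglutide arms at 8 weeks); the helper names are made up for illustration.

```python
BASELINE_KG = 73.2  # mean baseline mass of the Satiereal arm, as quoted in the text


def pct_to_kg(pct: float, baseline_kg: float = BASELINE_KG) -> float:
    """Convert a percent-of-body-weight loss into kilograms."""
    return baseline_kg * pct / 100.0


def kg_to_pct(kg: float, baseline_kg: float = BASELINE_KG) -> float:
    """Convert a loss in kilograms into percent of baseline body weight."""
    return 100.0 * kg / baseline_kg


step1_placebo_kg = pct_to_kg(2.0)      # lifestyle intervention only, about 2% at 8 weeks
step1_semaglutide_kg = pct_to_kg(4.0)  # about 4% at 8 weeks
satiereal_pct = kg_to_pct(0.96)        # reported Satiereal loss, as % of baseline

print(f"STEP 1 placebo equivalent:     {step1_placebo_kg:.2f} kg")
print(f"STEP 1 semaglutide equivalent: {step1_semaglutide_kg:.2f} kg")
print(f"Satiereal loss:                {satiereal_pct:.1f}% of baseline")
```

On this scale, the reported Satiereal loss is smaller than what the placebo arm of a prescription drug trial achieved with lifestyle changes alone.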

Weight loss changes for semaglutide

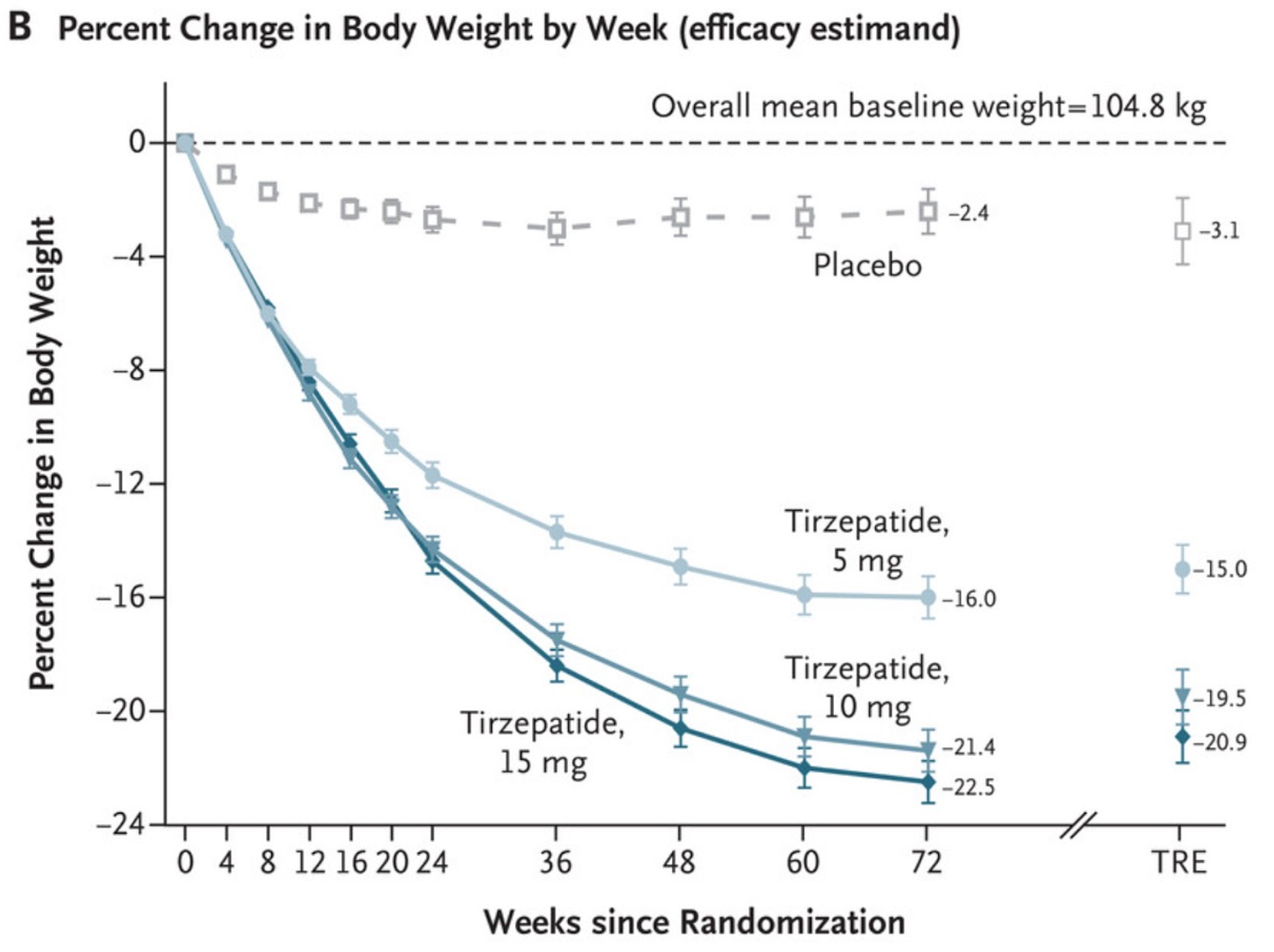

Weight loss change for patients on tirzepatide SURPASS-1 trial

For comparison, plotting the Satiereal weight loss percentage on a similar scale as the studies above shows that the effect was minimal.

The total number of snacking events during the 2-week run-in period was similar in the placebo and Satiereal groups (361 and 376 events, respectively). A significant reduction in snacking frequency relative to baseline was then seen with Satiereal from week 4 onward, whereas the placebo group showed a transient significant change at week 6, followed by a slight increase at week 8. In a nutshell, those in the placebo group experienced a massive drop in snacking events around week 6 too, bringing into question the validity of the study (placebo effect at play).

Why the Study is Insufficient to Make Claims: A Summary

Small sample size: With only 60 participants completing the study, the statistical power is limited.

Insufficient Duration: Like the first study, this study is too short to draw meaningful conclusions. Even in the studies of GLP-1 analogs, there is minimal weight loss early on.

Minimal Reduction in Weight: The weight loss was small, and the change in fat mass did not differ between arms. While the weight loss may have been statistically significant, it wasn’t clinically relevant.

Strong placebo effect: The drastic changes in snacking habits in the placebo group around week 6 show a strong placebo effect was at play.

Not reporting CIs: In this study, the authors report the standard error of the mean (SEM), not confidence intervals. When the standard errors overlap, it typically means the confidence intervals also overlap, reinforcing the idea that any difference observed is not statistically significant. While overlapping standard errors suggest a lack of significant difference, this is not definitive proof. Statistical tests, such as a t-test or ANOVA, are still required to formally test for significance, as overlapping standard errors do not account for all aspects of the data (such as sample size or variance). We can quickly compute CIs using the following formula (the sample size is 31 for Satiereal and 29 for placebo, so a Z-score was used for simplicity; a t-score was also used to cross-validate):

Interestingly, the CIs do not overlap (which is good), so it is a bit of an anomaly that the authors didn’t report them (or perhaps it is that they look too good? It is unclear).
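For readers who want to repeat this kind of check, a Z-based interval of the sort described above is usually computed as the mean plus or minus about 1.96 standard errors. The sketch below is a generic illustration, not the exact calculation used for this article: the function names are made up, and the two example arms use hypothetical numbers rather than values from the paper.

```python
from statistics import NormalDist


def ci_from_sem(mean: float, sem: float, level: float = 0.95) -> tuple[float, float]:
    """Z-based confidence interval: mean +/- z * SEM."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # about 1.96 for a 95% interval
    return mean - z * sem, mean + z * sem


def intervals_overlap(a: tuple[float, float], b: tuple[float, float]) -> bool:
    """True if two closed intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


# Hypothetical example values (kg change from baseline), NOT taken from the paper.
ci_active = ci_from_sem(mean=-1.0, sem=0.20)
ci_placebo = ci_from_sem(mean=0.0, sem=0.25)

print(f"active CI:  ({ci_active[0]:.2f}, {ci_active[1]:.2f})")
print(f"placebo CI: ({ci_placebo[0]:.2f}, {ci_placebo[1]:.2f})")
print("overlap:", intervals_overlap(ci_active, ci_placebo))
```

If a paper reports a standard deviation instead of an SEM, divide it by the square root of the group's sample size before passing it in. With about 30 people per arm, a t-based multiplier would be slightly larger than 1.96.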

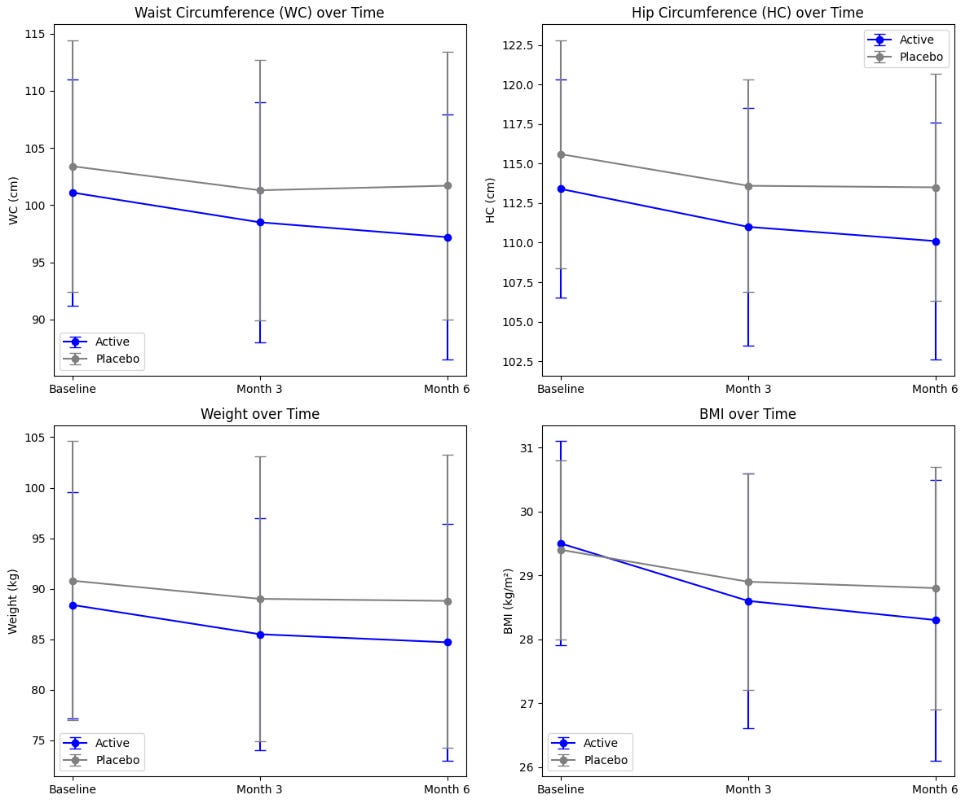

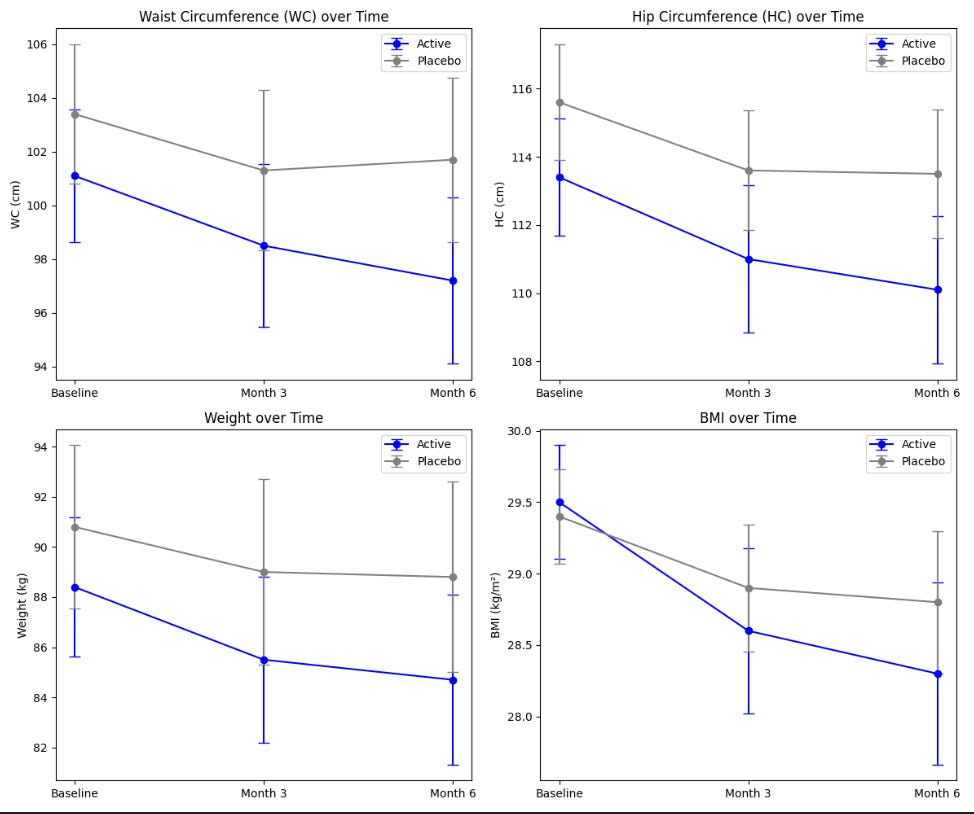

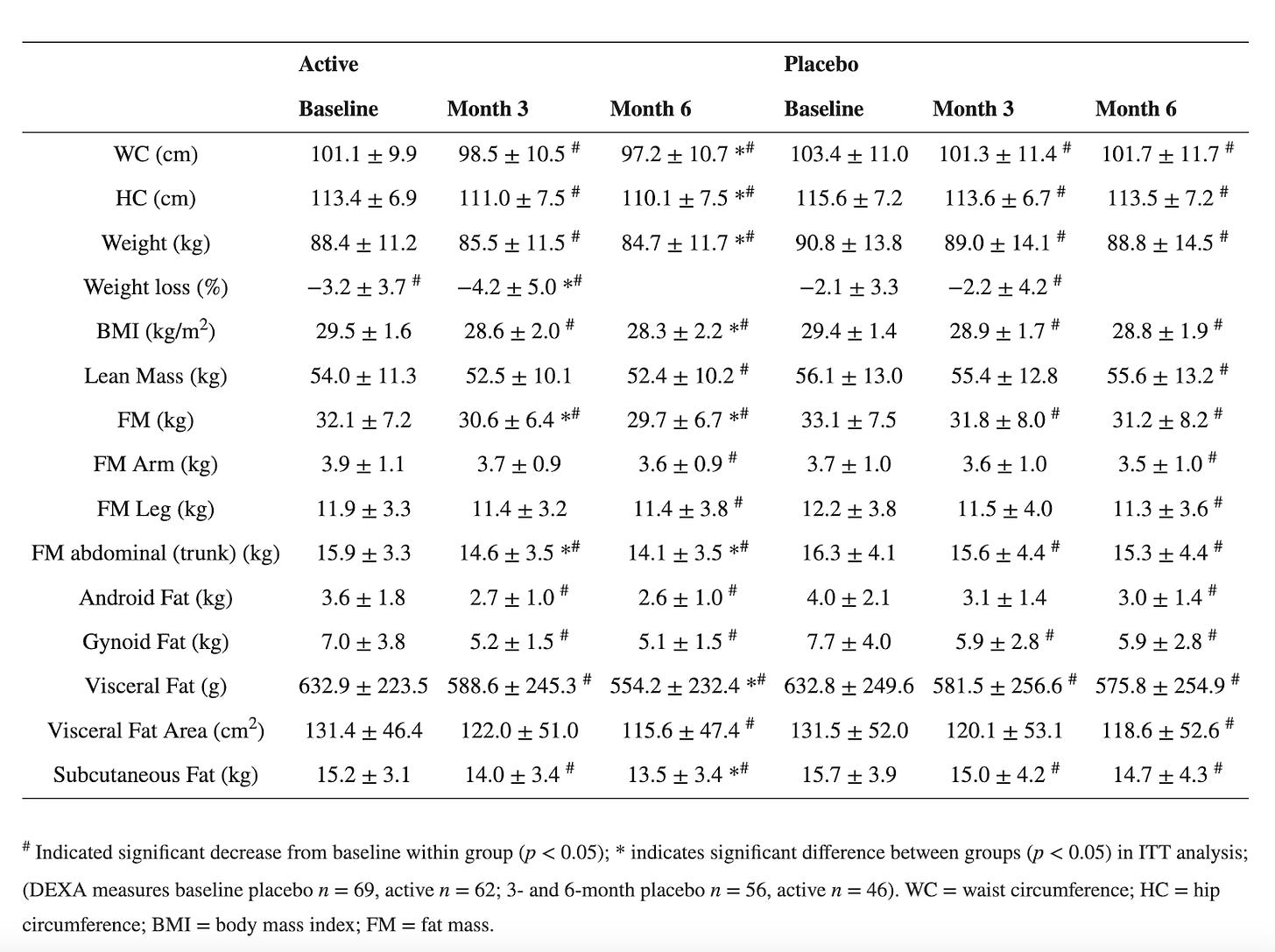

Third claim: Morosil Red Orange Fruit Extract decreased visceral fat and body mass index (BMI) starting at 3 months, in combination with diet and exercise.

There are a couple of studies that suggest benefits for weight loss, but both were funded by the company behind the product, which raises concerns about bias. In fact, the company distributing these ingredients holds exclusive rights to harvest this specific fruit, which could limit impartial research and affect the credibility of the findings. This doesn't necessarily mean the results are invalid; it just means they warrant more scrutiny and should be repeated independently by other groups. Another point against them is that they are published with MDPI. While not everything published by MDPI is problematic, they have a history of… questionable publishing practices and were on Beall’s list for a time.

Let’s look at one of the papers. This one sought to validate prior results. Once again, it seems like it is not reporting confidence intervals either - at least it is unclear from how they labeled their data. Additionally, the study indicates that significant weight loss may be achieved, but only when combined with a proper diet and regular physical exercise. All participants were given uniform guidance on physical activity, specifically to walk for 30 minutes three times a week and to log all physical activities in their diaries. They were also instructed to follow a calorie-controlled diet and track their daily food intake using an app. The fact that they didn’t have an intervention arm with just the orange extract and without the exercise shows how poorly designed the study was.

One positive note about this study is that they did seem to enroll a good number of participants, at least 180, and they accounted for high drop-off rates. They wound up with 98 participants at the end of the 6-month period.

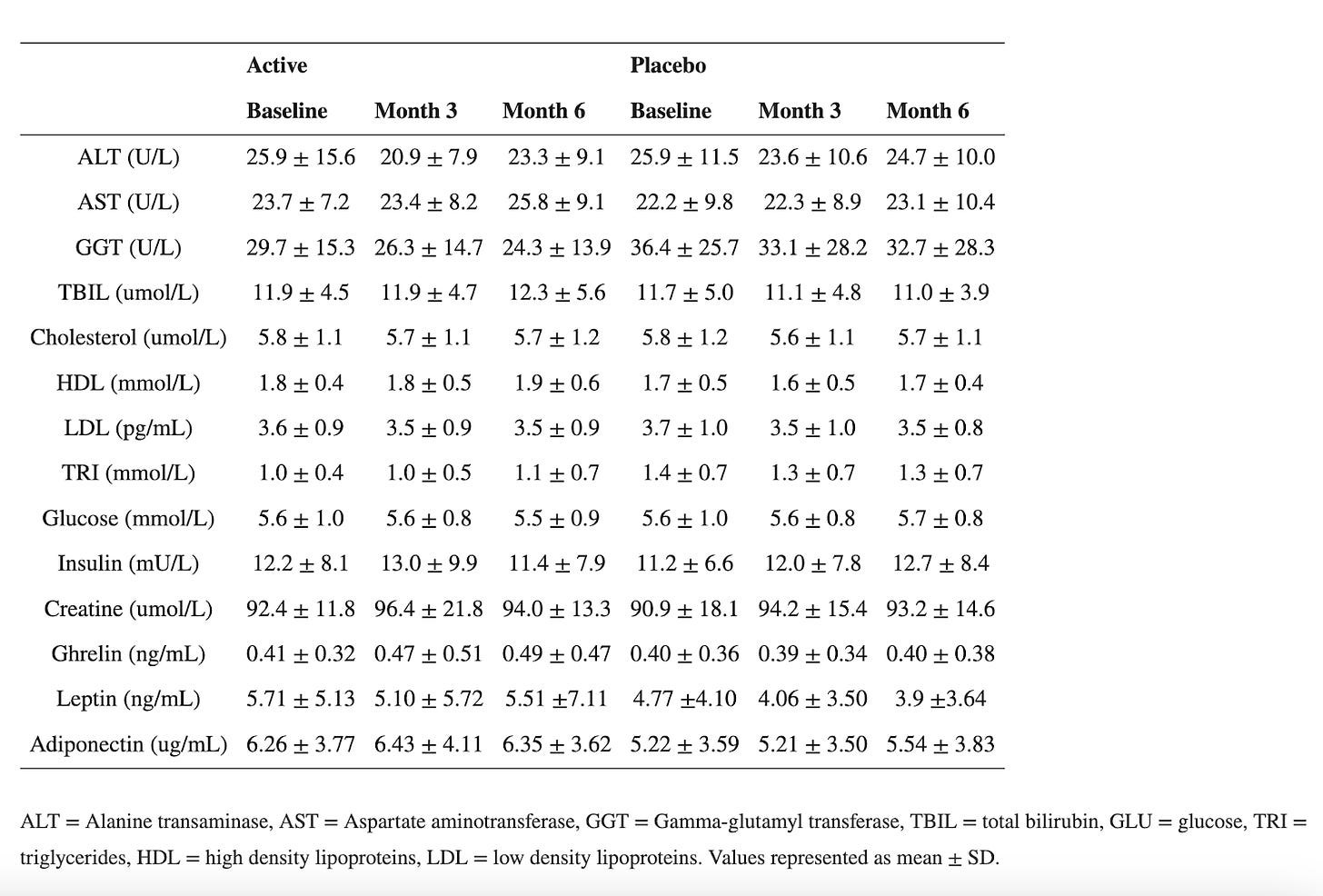

Primary endpoints are plotted below:

Because it is unclear whether they are reporting a standard error or a CI, let's compute the confidence intervals for each parameter from the reported values and the sample sizes. The sample size is 69 for placebo and 62 for the active group at baseline, decreasing to 56 and 46, respectively, at the 3- and 6-month time points.

In this case, either way, the CIs for the main outcomes overlap drastically, creating major uncertainty around the effect size and significance of the intervention.

Often average data fails to capture meaningful trends. Technically speaking, both the placebo and active group had significant changes in waist/hip circumferences, weight and BMI. Participants on “Moro” orange standardized extract had a mean overall weight loss of 4.2% of starting body weight by month 6, and the placebo group had a mean overall weight loss of 2.2% of starting body weight. Both the active and placebo groups had a significant reduction in waist (3.9 cm vs. 1.7 cm, respectively) and hip (3.4 cm vs. 2.0 cm, respectively) circumference from baseline following 6 months of supplementation. Yes, at first glance this shows promise. However, with the numbers above, for a 150-lb person, adding the orange extract would only result in losing an additional 2% of body weight after 6 months, or an extra 3 lb. Not nothing, but not much. Tirzepatide on the other hand resulted in weight loss of about 12-16% at that point. The change in waist circumference for patients taking once-weekly semaglutide was –13.54 cm after 68 weeks.
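The back-of-the-envelope numbers in this paragraph can be reproduced in a few lines. The sketch uses only figures quoted above (a 150-lb person, 4.2% versus 2.2% mean loss at 6 months, and roughly 12-16% for tirzepatide at a similar point); `pounds_lost` is a helper name made up for illustration.

```python
def pounds_lost(start_lb: float, pct_loss: float) -> float:
    """Weight lost in pounds for a given percent-of-body-weight loss."""
    return start_lb * pct_loss / 100.0


START_LB = 150.0

active_lb = pounds_lost(START_LB, 4.2)    # "Moro" orange extract arm
placebo_lb = pounds_lost(START_LB, 2.2)   # placebo arm (diet and exercise only)
extra_lb = active_lb - placebo_lb         # what the extract adds on top

tirzepatide_low_lb = pounds_lost(START_LB, 12.0)
tirzepatide_high_lb = pounds_lost(START_LB, 16.0)

print(f"extract arm: {active_lb:.1f} lb, placebo arm: {placebo_lb:.1f} lb")
print(f"extra loss attributable to the extract: {extra_lb:.1f} lb")
print(f"tirzepatide range: {tirzepatide_low_lb:.0f} to {tirzepatide_high_lb:.0f} lb")
```

Most of the loss in the extract arm is matched by the placebo arm, so the supplement's own contribution is the roughly 3-lb difference, not the full 4.2%.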

Furthermore, 36% of the participants in the active group had a weight loss of more than 5% vs. 22.5% of the participants in the placebo group. Amongst those with BMI between 25 and 30, 35% of the participants in the active group had a weight loss of more than 5% vs. 24% of the participants in the placebo group. This means that even in the placebo group, more than one in 5 had significant weight loss, confirming the uncertainty around the effect size and significance of the intervention.

The study also had a number of exclusion criteria (things that prohibit you from participation in the study). Most of these seem reasonable but participants were excluded if they took any prescription medication other than oral contraceptive pills for women, which is a HUGE chunk of the population. This just means that it is hard to generalize the results to a broader demographic. Outside of BMI>25, participants were otherwise healthy.

Why the Study is Insufficient to Make Claims: A Summary

Insufficient Duration: While longer than the other study, it is still much shorter than the data from clinical trials of GLP-1 drugs.

Modest Reduction in Weight: The weight loss was minimal after 6 months.

Strong confounder effect: The control group, who also monitored daily caloric intake and exercised, also showed drops in waist circumference, hip circumference, weight, and BMI, albeit smaller than those who took the active. This, coupled with the overlapping confidence intervals, increases the uncertainty around the effect of the intervention.

Final Verdict

In all, the studies cited to make efficacy claims for GLP-1 Daily absolutely fail to constitute strong evidence. As observed, the effects are, at best, negligible (or within the noise) and comparable to the placebo groups of other weight-loss pharmaceuticals.

Combining 3 Supplements

Another issue here is that while one could argue that research has occurred on these 3 active ingredients individually (ignoring the glaring problems with the quality of that work), no data has been made available regarding the combination of these agents in a single product. One of the key data gaps has to do with the potential for drug-drug interactions. The fact that these compounds are sourced botanically says nothing about their ability to avoid concerning drug interactions. For example, St. John’s wort is a plant occasionally used for the treatment of depression. However, the list of drugs it can interact with is extensive because compounds within it interfere with multiple enzymes that control the metabolism of other drugs.

The Rule of Three in Study Design

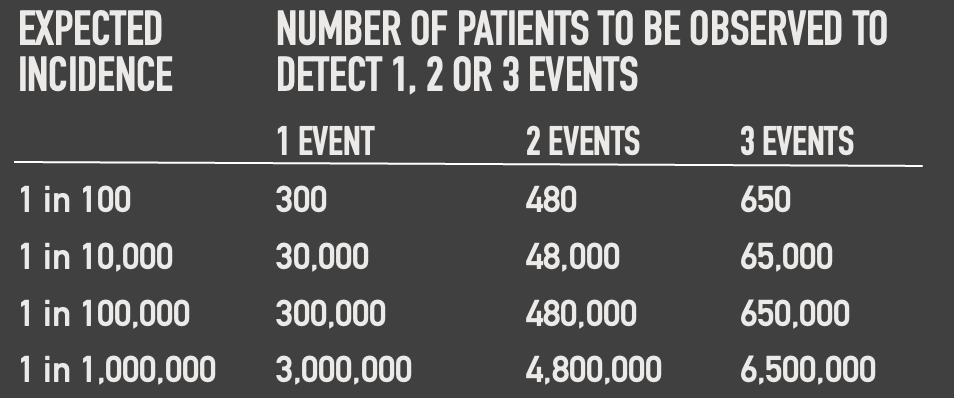

To detect rare adverse events, a large and diverse sample size is necessary. Unfortunately, many supplements and weight loss products on the market are not subjected to the same rigorous testing standards as pharmaceutical drugs, and thus lack the sample sizes needed to detect some adverse events. LEMME’s GLP-1 Daily, likely marketed as a dietary supplement, bypasses the stringent FDA requirements for clinical trials, which pharmaceutical drugs must meet. Consequently, there is little to no data on its safety profile in diverse populations or over the long term.

The "Rule of Three" in clinical trials is a statistical principle used to estimate the upper bound of the probability of an event occurring when no such events have been observed in the trial. This rule is particularly useful in situations where rare events are being monitored, such as adverse effects or rare complications. If no events are observed in a sample of size n, the upper bound of the 95% confidence interval for the true probability of the event is approximately 3/n. In other words, to detect an event that occurs rarely (i.e., does not usually occur without the intervention), you need three times as many subjects as the inverse of the event's frequency.

Example: If a new medication causes a serious adverse reaction in 1 in 1000 cases, you would need to study approximately 3000 subjects to have a 95% chance of detecting at least one case of that adverse reaction.

As you can see, none of these “trials” for the bioflavonoids used in LEMME’s GLP-1 Daily enrolled enough participants to even detect 1 event with 1 in 100 occurrence.
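The Rule of Three arithmetic is easy to verify. The sketch below shows the 3/n approximation next to the exact bound it approximates, the chance of seeing at least one event in a trial of a given size, and how the three supplement trials discussed above (30, 60, and 180 participants, as quoted in the text) compare with the roughly 300 needed for a 1-in-100 event. The function names are made up for illustration.

```python
def rule_of_three_upper_bound(n: int) -> float:
    """Approximate 95% upper bound on event probability when 0 events are seen in n subjects."""
    return 3.0 / n


def exact_upper_bound(n: int, level: float = 0.95) -> float:
    """Exact bound: the p for which seeing zero events in n subjects has probability 1 - level."""
    return 1.0 - (1.0 - level) ** (1.0 / n)


def prob_at_least_one(p: float, n: int) -> float:
    """Chance of observing at least one event with per-subject probability p in n subjects."""
    return 1.0 - (1.0 - p) ** n


def subjects_needed(one_in: int) -> int:
    """Rule-of-three sample size for an event occurring in 1 out of `one_in` people."""
    return 3 * one_in


print("needed for a 1-in-1000 event:", subjects_needed(1000))
print(f"chance of seeing it in 3000 subjects: {prob_at_least_one(1 / 1000, 3000):.3f}")

# Sample sizes of the three supplement trials, as quoted in the text.
for n in (30, 60, 180):
    print(f"n={n}: zero events only rules out rates above ~{rule_of_three_upper_bound(n):.1%}; "
          f"chance of catching a 1-in-100 event: {prob_at_least_one(0.01, n):.0%}")
```

Even the largest of the three trials falls short of the roughly 300 participants the rule calls for to reliably catch an adverse event that affects 1 in 100 people.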

The rigorous clinical trial process for GLP-1 and GIP receptor-based drugs contrasts sharply with this celebrity-promoted supplement. These drugs were tested for over a decade in thousands of participants across various populations to ensure their efficacy and safety. GLP-1 Daily, however, lacks any substantial clinical data or evidence to support its claims.

Moreover, while GLP-1 and GIP receptor agonists require continuous monitoring and careful evaluation for potential risks, GLP-1 Daily bypasses those stringent requirements. This makes its safety and efficacy highly questionable. The absence of rigorous testing, such as that mandated for GLP-1 and GIP receptor agonists, means that rare but potentially serious adverse effects could go undetected until it is too late.

Dietary supplements are not FDA-approved, and thus do NOT face the same research, manufacturing-quality, and clinical trial requirements (and expenses) that pharmacological interventions do.

The Catastrophic History of Supplement Deregulation in the United States

The Vitamin-Mineral (Proxmire) Amendment to the Federal Food, Drug, and Cosmetic Act, enacted on April 23, 1976, is the epitome of anti-science legislation. Even though it was rooted in Senator William Proxmire's efforts to curb wasteful government spending, the amendment prohibited the FDA from setting standards for supplements, classifying them as drugs, or requiring that they contain only beneficial ingredients. It also barred the FDA from limiting the quantity or combination of vitamins, minerals, or other ingredients in supplements unless proven unsafe.

The FDA commissioner Alexander Schmidt called it "a charlatan's dream." The amendment led to a boom in the supplement industry and its political influence. In the 1980s and 1990s, the U.S. Congress considered several legislative proposals aimed at expanding the regulatory authority of the FDA, including the Nutrition Advertising Coordination Act of 1991, which sought to impose stricter rules on the labeling of dietary supplements. In response, many companies within the health food industry launched lobbying efforts to defeat the proposed legislation, warning the public that such measures could lead to an outright ban on dietary supplements by the FDA.

In 1994, the Dietary Supplement Health & Education Act (DSHEA), supported by Senators Orrin Hatch and Tom Harkin, further limited the FDA's oversight, allowing supplements to be marketed without FDA approval and to make unproven claims about their effects on the body. On October 25 of that year, President Bill Clinton put pen to paper, making it law. Notably, Hatch wasn’t just inspired by a passion for health; he also had some generous backing from supplement companies, including multi-level marketing heavyweights like XanGo and Herbalife. This is all made even more problematic considering supplements have no proven benefit for healthy people who have even a remotely balanced diet.

Before the DSHEA, supplements were regulated under the Food, Drug, and Cosmetic Act (FDCA) of 1938, which mandated that food, drugs, cosmetics, and medical devices be proven safe and effective for their intended use.

This doesn’t automatically mean that supplements are all useless or harmful. The problem is the lack of regulation they are subject to means that in most cases we don’t have a great idea of what contexts they are useful or helpful in. On top of that, even if a supplement is reported to contain some substance known to have a medical benefit in some context, this does not guarantee that it is present in the reported quantities (or even at all) or that the supplement itself is not adulterated with potentially dangerous substances that aren’t reported. There is some third-party testing (e.g., Labdoor) that does compare the content of supplements to their labels, but, for the most part, buying supplements in the US is like Forrest Gump’s box of chocolates: you never know what you’re going to get.

Bottom Line

In all, LEMME’s GLP-1 Daily supplement rings eerily similar to Glucose Goddess’ Anti-Spike Formula, designed to prevent blood sugar from spiking even though this outcome measure is technically irrelevant in non-diabetics, and to Pendulum probiotics, which also claim to increase GLP-1. Many people pursue weight loss for aesthetic reasons, influenced by societal pressures and unrealistic images of beauty, often enhanced by cosmetic procedures. However, it's crucial to prioritize our own physical and mental health above these external expectations. Spending money on a supplement that lacks evidence of safety or effectiveness is a disservice to ourselves. We deserve better.

Prescription GLP-1 medications undergo rigorous testing and regulation by health authorities to ensure their safety and effectiveness. They are prescribed with oversight to prevent use in those unlikely to benefit. In contrast, over-the-counter supplements like GLP-1 Daily lack the same stringent review, raising concerns about efficacy, potential side effects, and dosage accuracy. Without thorough clinical studies, it’s hard to assess their performance against prescription alternatives, and available data suggests any effect is minimal, if not negligible. More critically, limited data makes it difficult to track potential adverse events, despite the likelihood of their occurrence. Between 2004 and 2013, the U.S. Food and Drug Administration (FDA) received over 15,000 reports of health problems linked to dietary supplements, including 339 deaths and nearly 4,000 hospitalizations. Efforts to pass legislation aimed at regulating the dietary supplement industry, such as the Dietary Supplement Listing Act of 2022, have repeatedly been unsuccessful, likely because the U.S. dietary supplement market is valued at approximately $53.58 billion (2023), with more than 80,000 products on the market since DSHEA was passed.

Pharmaceutical companies often engage in unfair pricing practices that impose a heavy financial burden on consumers, and they should be held accountable for these actions. However, it is fair to say that supplement companies should also be held to the same rigorous safety and efficacy standards as pharmaceutical companies to ensure public health protection. There shouldn’t be double standards here.

Footnotes*

Byetta (Exenatide):

Phase II Trial: Involved over 100 subjects with poorly controlled type 2 diabetes. Exenatide significantly reduced HbA1c levels when added to ongoing oral medication, surpassing standard medications and placebo.

Phase III Trials: Three large-scale, triple- and double-blind, placebo-controlled studies with 1,446 patients who had inadequate glycemic control on oral medications (metformin, sulfonylureas, or both). These 30-week trials showed an average HbA1c reduction of 1% with the highest dose (10 mcg twice daily), and about 40% of patients achieved HbA1c levels of 7% or less. Patients also experienced significant weight loss, maintained in long-term open-label extensions for up to a year.

Liraglutide (Victoza and Saxenda):

LEAD Program: A series of six Phase III randomized clinical trials involving thousands of participants compared different doses of liraglutide (0.6, 1.2, 1.8 mg/day) to placebo and other treatments like glimepiride, rosiglitazone, and insulin glargine. Results showed substantial improvements in glycemic control (HbA1c reduction) and weight loss. In total, 4,456 participants took part across the six LEAD Phase III trials.

SCALE Trials (Saxenda, Higher-Dose Liraglutide): A 56-week, double-blind, placebo-controlled trial with over 5,000 overweight or obese participants, including 635 with type 2 diabetes. Liraglutide 3.0 mg achieved significantly greater weight loss than placebo, with 52% of participants achieving at least 5% weight loss, compared to 24% with placebo, and maintaining it for up to three years in long-term studies.

LEADER Trial: A major cardiovascular outcomes trial involving over 9,300 high-risk patients with type 2 diabetes. It demonstrated that liraglutide significantly reduced the risk of cardiovascular death, nonfatal heart attack, and stroke compared to placebo, while also lowering all-cause mortality. Follow-up was 3.8 years on average.

Semaglutide (Ozempic):

In June 2008, a phase II clinical trial began studying semaglutide, a once-weekly diabetes therapy, as a longer-acting alternative to liraglutide. It was given the brand name Ozempic. Clinical trials started in January 2016 and ended in May 2017. The FDA approved Ozempic based on data from seven clinical trials (SUSTAIN) involving 4,087 patients with type 2 diabetes. Additionally, the FDA considered results from a separate trial of 3,297 type 2 diabetes patients who were at high risk for cardiovascular events. In patients with type 2 diabetes, treatment with Ozempic can lower HbA1c (hemoglobin A1c), which is a measure of blood sugar control.

SUSTAIN 1: 387 participants; compared semaglutide to placebo in patients with type 2 diabetes.

SUSTAIN 2: 1,225 participants; assessed the efficacy and safety of semaglutide versus the dipeptidyl peptidase-4 (DPP-4) inhibitor sitagliptin in patients with type 2 diabetes inadequately controlled on metformin, thiazolidinediones, or both.

SUSTAIN 3: 813 participants; compared once-weekly semaglutide 1.0 mg s.c. with exenatide extended release (ER) 2.0 mg s.c. in subjects with type 2 diabetes.

SUSTAIN 4: 1,082 participants; assessed semaglutide compared with insulin glargine in patients with type 2 diabetes who were inadequately controlled with metformin (with or without sulfonylureas).

SUSTAIN 5: 397 participants; assessed semaglutide vs placebo on glycemic control as an add-on to basal insulin in patients with type 2 diabetes.

SUSTAIN 6: 3,297 participants; a cardiovascular outcomes trial that assessed the cardiovascular safety of semaglutide in adults with type 2 diabetes at high cardiovascular risk.

SUSTAIN 7: 1,201 participants; compared two doses of semaglutide (0.5 mg and 1.0 mg) with two doses of dulaglutide (0.75 mg and 1.5 mg) in adults with type 2 diabetes.

SUSTAIN 8: 788 participants; compared semaglutide with canagliflozin (Invokana) in adults with type 2 diabetes on metformin.

SUSTAIN 9: 302 participants; assessed semaglutide in adults with type 2 diabetes on basal insulin.

SUSTAIN 10: 577 participants; compared semaglutide with liraglutide (Victoza) in adults with type 2 diabetes.

SUSTAIN 11: 505 participants; evaluated semaglutide in a population with type 2 diabetes in a real-world setting.

Higher-dose semaglutide (Wegovy):

The STEP trials started in March 2021 to examine semaglutide's effectiveness for obesity, evaluating treatment with once-weekly subcutaneous semaglutide or placebo, plus lifestyle intervention. One of the trials examined the combined effect of the drug with intensive behavioral therapy in 611 adults with obesity or overweight. The results indicated significant weight loss benefits for participants receiving semaglutide in addition to behavioral therapy, compared to placebo with the same behavioral intervention. STEP 4 was a withdrawal trial that involved 803 participants who first underwent a 20-week run-in period on semaglutide to reach the target dose. Those who continued on semaglutide lost an additional 7.9% of their body weight over 48 weeks, while those who switched to placebo regained 6.9% of their weight.

STEP-1: 1,961 participants; evaluated semaglutide’s ability to promote weight loss as an adjunct to lifestyle intervention.

STEP-2: 1,210 participants; evaluated semaglutide 2.4 mg versus semaglutide 1.0 mg (the dose approved for diabetes treatment) and placebo for weight management in adults with overweight or obesity, and type 2 diabetes.

STEP-3: 611 participants; evaluated semaglutide 2.4 mg as an adjunct to intensive behavioral therapy (IBT) vs placebo, in subjects with overweight or obesity.

STEP-4: 902 participants; evaluated once-weekly subcutaneous semaglutide during run-in. After 20 weeks (16 weeks of dose escalation; 4 weeks of maintenance dose), 803 participants (89.0%) who reached the 2.4-mg/wk semaglutide maintenance dose were randomized (2:1) to 48 weeks of continued subcutaneous semaglutide (n = 535) or switched to placebo (n = 268), plus lifestyle intervention in both groups.

STEP-5: 304 participants; assessed the efficacy and safety of once-weekly subcutaneous semaglutide 2.4 mg versus placebo (both plus behavioral intervention) for long-term treatment of adults with overweight or obesity.

Mounjaro

GIP receptor agonists, often combined with GLP-1 receptor agonists (as in the dual agonist tirzepatide, approved under the brand name Mounjaro in 2022), went through similar extensive development and trials. Researchers needed to establish their combined efficacy and safety profile over existing single-agonist treatments. For instance, tirzepatide's approval was based on results from the SURPASS and SURMOUNT clinical trial programs, which included over 25,000 patients across multiple studies.

Eriomin® Lemon Fruit Extract Study Results

Results for Satiereal intervention

Results for orange extract (Morosil) intervention