In Uvalde, the Myth of a “Good Guy with a Gun” Was Vividly Disproven

A case of shoddy statistics


Cliff Notes: This post reviews the debate around defensive gun use, debunking the myth that guns are used defensively over 2 million times a year. It delves into the methodological flaws pointed out by Hemenway in studies by researchers like Kleck and challenges the effectiveness of guns in saving lives or reducing crime. The critique extends to John Lott's work, pointing out flaws and inconsistencies in his research, concluding that the focus should be on comprehensive policies to address crime rather than relying on armed civilians.

A new Justice Department report identified and condemned catastrophic law enforcement failures in the response to an active shooter at the Uvalde, Texas elementary school on May 24, 2022, citing delayed action, lack of urgency in establishing a command post, and dissemination of inaccurate information to grieving families. The report finds that problems with training, communication, leadership, and technology prolonged the crisis and cost the lives of people who would have survived had protocol been followed.

After school shootings, gun advocates often revive the proposal to arm more teachers and civilians. However, considering that 376 trained law enforcement personnel were unable to stop the continued attack on innocent children, the notion that armed civilians, particularly teachers, could have successfully intervened seems impractical, if not ludicrous. We have always known that the idea that a good guy with a gun stops a bad guy with one is fiction, and at best a dishonest misrepresentation of shoddy statistics. It was David Hemenway, Professor of Health Policy and Director of the Harvard Injury Control Research Center, who paved the way to deconstruct the notion of a good guy with a gun. I summarize some of his work here.

According to the Pew Research Center, 72 percent of gun owners in 2023 said they own a gun for protection, and a substantial majority, 81 percent, said they feel safer when in possession of a gun. Underlying most of these answers is the assumption that guns stop criminals and keep people safer. But this is not supported by the case data we have from criminal records, or by basic math for that matter.

Gun rights advocates usually tout the NRA-backed myth that guns are used defensively “over 2 million times every year—five times more frequently than the 430,000 times guns were used to commit crimes.” This erroneous figure has been widely misused to obstruct the enactment of sensible gun laws. Let’s TECH it apart.

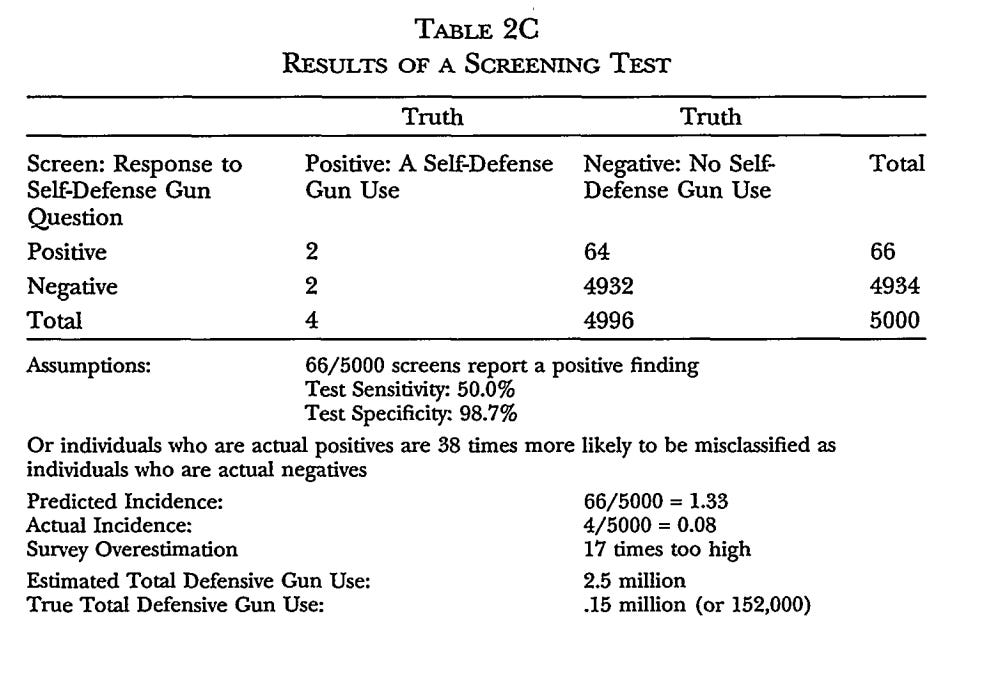

In 1992, Gary Kleck and Marc Gertz, criminologists at Florida State University, conducted a random survey to determine the annual number of defensive gun uses in the US. They surveyed 5,000 individuals, asking them if they had used a firearm in self-defense in the past year and, if so, for what reason and to what effect.

Sixty-six incidents of defensive gun use were reported, or about 1.3% of respondents. The researchers then extrapolated their findings to the entire U.S. adult population (about 200 million), resulting in an estimated 2.5 million defensive gun uses per year.

In the survey, 34% of the reported defensive gun uses occurred during a burglary. That implies guns were used in self-defense in ~845,000 burglaries a year. But that doesn’t add up. Here is why:

In 1991 fewer than 6 million burglaries were committed in the US. This number accounts for underreporting. There were roughly 94 million households in the US at that time.

According to the NCVS, someone was at home in only 23.6% of those cases (1.2-1.3 million). Hemenway sets the figure at 22%, since not all victims who were sleeping were necessarily doing so in their homes. About 15.4% of the respondents could not recall their whereabouts at the time of the burglary.

42% of U.S. households owned firearms in 1991. That leaves ~546,000 gun-owning households where someone was home at the time of the burglary.

The victims in two-thirds of the occupied dwellings were asleep, further reducing the potential number of self-defensive firearm encounters to 182,000.

This all means that for the 2.5 million figure to pencil out, burglary victims would have had to use their guns in self-defense more than 100% of the time. In fact, they would have had to use their guns roughly 3-5 times each, which is VERY unlikely. Even if we assume that every respondent who could not recall their whereabouts was home and awake (so 39% of victims, or ~2 million, were home, and roughly three-fifths of the gun owners among them, or ~500,000, were awake), it would still imply they used their guns more than 100% of the time. It is reasonable to think that some of Kleck’s respondents classified motor vehicle thefts as burglaries in their responses. But even then, under extreme assumptions, the same conclusion holds.
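The chain of arithmetic above can be sketched in a few lines of Python. This is only a back-of-the-envelope check: every input is one of the rounded figures quoted in this post (using the upper end of the 1.2-1.3 million range), and the variable and function names are mine, chosen for illustration.

```python
# Back-of-the-envelope check of the burglary figures quoted in the text.
# All inputs are the article's rounded numbers, not fresh data.

CLAIMED_BURGLARY_DGUS = 845_000    # ~34% of the 2.5 million estimate
OCCUPIED_BURGLARIES = 1_300_000    # upper end of the 1.2-1.3 million range
GUN_OWNERSHIP_RATE = 0.42          # share of households owning a firearm, 1991
AWAKE_SHARE = 1 / 3                # two-thirds of occupied-home victims were asleep


def defensive_opportunities(occupied, ownership_rate, awake_share):
    """Burglaries where an awake resident of a gun-owning household was home."""
    return occupied * ownership_rate * awake_share


opportunities = defensive_opportunities(
    OCCUPIED_BURGLARIES, GUN_OWNERSHIP_RATE, AWAKE_SHARE
)
uses_per_opportunity = CLAIMED_BURGLARY_DGUS / opportunities

print(f"Plausible opportunities: {opportunities:,.0f}")
print(f"Implied gun uses per opportunity: {uses_per_opportunity:.1f}")
```

Anything above 1.0 in the second line means the claimed number of defensive uses exceeds the number of burglaries in which a defensive use was even possible.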

The “false positive” problem for rare events

Hemenway goes on to explain the problem with trying to measure the occurrence of a rare event: one has to be especially careful about misclassification (i.e., calling something that is false true, or calling something that is true false). Random misclassification can result in significant overestimation, even when the actual rate of misclassification is low. This is a statistical consequence of no test being perfect, and surveys susceptible to recall and self-presentation biases are particularly exposed. This is also why diagnostic screening in medicine is so fraught: a test might be very good at catching true positives, yet most of its positive results can still be false.

To understand this concept we need to know a little bit of vocabulary though. Any given test has a set sensitivity and specificity.

The sensitivity of a test describes its ability to flag a positive result if the thing being tested for is present. A test that has 99% sensitivity will give a positive result in 99 out of 100 instances, assuming the thing being tested for is present for all 100. It also means that 1 out of 100 will be falsely classified as negative, despite being positive.

The specificity of a test describes its ability to yield a negative result when the thing being tested for is not present. For example, a 99% specific test will give a negative result in 99 out of 100 instances when the thing being tested for is not present in that sample of 100. This implies that 1 out of 100 will be falsely classified as positive, despite being negative.

The positive predictive value (PPV) just looks at the positive cases and indicates the percentage of individuals with a positive test outcome who indeed possess the relevant outcome (are positive). The negative predictive value (NPV) looks at negative cases and represents the proportion of individuals with a negative test result who are genuinely free of the condition or are negative. These are not quite the same thing, however, as the true positive and true negative rates, respectively. Here’s why:

Assume that the actual incidence of gun use in self-defense in the population is 0.1%. So in a survey of 1000 random people, 1 would be expected to have used a gun in self-defense. What does that mean for the other 999? It means they all have a chance to be classified as a false positive. Yet only one person can be misclassified as a false negative. Because we are trying to estimate a rare event - even a small percentage bias can lead to a large overestimate.

A 0.1% true incidence means 999 respondents can be misclassified as false positive amongst 1000 survey respondents

Say the survey overestimates the true incidence by 1 percentage point. If the true incidence is 20%, going from 20% to 21% is minor. But if the true incidence is 0.1%, going to 1.1% means you are overestimating by 11X.
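To make the vocabulary concrete, here is a minimal Python sketch of the same thought experiment. It assumes, purely for illustration, a survey of 1,000 people with perfect sensitivity and 99% specificity; those two rates are my assumptions, not measured properties of any real survey, and the function names are hypothetical.

```python
def expected_counts(n, prevalence, sensitivity, specificity):
    """Expected true/false positives and negatives in a sample of size n."""
    positives = n * prevalence          # people who really experienced the event
    negatives = n - positives           # people who did not
    tp = positives * sensitivity        # correctly counted as positive
    fn = positives - tp                 # missed positives
    tn = negatives * specificity        # correctly counted as negative
    fp = negatives - tn                 # wrongly counted as positive
    return {"tp": tp, "fp": fp, "tn": tn, "fn": fn}


def summarize(counts, n):
    """Return (apparent rate, positive predictive value, negative predictive value)."""
    apparent_rate = (counts["tp"] + counts["fp"]) / n
    ppv = counts["tp"] / (counts["tp"] + counts["fp"])
    npv = counts["tn"] / (counts["tn"] + counts["fn"])
    return apparent_rate, ppv, npv


N = 1000
for true_rate in (0.001, 0.20):
    counts = expected_counts(N, true_rate, sensitivity=1.0, specificity=0.99)
    apparent, ppv, npv = summarize(counts, N)
    print(
        f"true rate {true_rate:.1%}: apparent {apparent:.2%}, "
        f"inflation {apparent / true_rate:.1f}x, PPV {ppv:.1%}, NPV {npv:.1%}"
    )
```

Under these assumptions the same 1% false positive rate barely moves the estimate for the common event but inflates the rare one roughly elevenfold, and fewer than one in ten of its positive answers is genuine.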

So let’s go back to the survey conducted by Kleck. If only 6 respondents truly used a gun in self-defense, the true incidence rate is ~0.1% (about 240,000 uses a year). However, if another 60 respondents were misclassified, likely due to recall bias, the result is a more than 10-fold overestimate, or ~2.5M when extrapolated. This goes back to a fundamental point about statistics: even with a highly specific test, if the thing being tested for is extremely rare, most of the positive results produced will probably be false positives.

Misclassification of 1% in Kleck’s work would result in 11X overestimation
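The same point can be checked with the survey's own round numbers. The sketch below assumes the hypothetical split described above (6 genuine reports plus 60 misclassified ones among 5,000 respondents) and a naive, unweighted extrapolation to 200 million adults. The published 2.5 million figure comes from weighted data, so the unweighted result lands slightly higher.

```python
SAMPLE_SIZE = 5_000
ADULT_POPULATION = 200_000_000


def extrapolate(reports, sample_size=SAMPLE_SIZE, population=ADULT_POPULATION):
    """Scale a count of survey reports up to the adult population (unweighted)."""
    return reports * population // sample_size


genuine_reports = 6        # hypothetical number of real defensive gun uses
misclassified_reports = 60  # hypothetical false positives
all_reports = genuine_reports + misclassified_reports

genuine_total = extrapolate(genuine_reports)
apparent_total = extrapolate(all_reports)
false_share = misclassified_reports / all_reports

print(f"Genuine uses, extrapolated:  {genuine_total:,}")
print(f"Apparent uses, extrapolated: {apparent_total:,}")
print(f"Inflation factor: {apparent_total / genuine_total:.0f}x")
print(f"Share of positive answers that are false: {false_share:.0%}")
```

Sixty misclassified answers is only 1.2% of the sample, yet in this scenario it accounts for about nine out of ten reported defensive gun uses.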

Hemenway presented various scenarios altering the specificity and sensitivity of Kleck's survey to illustrate that Kleck's estimate is highly sensitive to tiny variations in the specificity rate.

Hemenway presents a sensitivity analysis, using three distinct scenarios, of how false positives can lead to huge overestimates in his work “Survey research and self-defense gun use: An explanation of extreme overestimates,” Journal of Criminal Law and Criminology, 1997; 87:1430-1445.
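A sensitivity analysis of this kind can be sketched as follows. The correction used here is the standard Rogan-Gladen estimator from epidemiology, which I am substituting for illustration; it is not necessarily the exact procedure Hemenway used, and the scenarios below are my own illustrative values rather than his three. The observed rate is the unweighted 66 out of 5,000.

```python
def corrected_rate(observed_rate, sensitivity, specificity):
    """Rogan-Gladen correction: back out the true rate from an observed one."""
    false_positive_rate = 1.0 - specificity
    denominator = sensitivity - false_positive_rate
    if denominator <= 0:
        raise ValueError("sensitivity + specificity must exceed 1")
    # Clamp at zero: the observed rate can be fully explained by false positives.
    return max(0.0, (observed_rate - false_positive_rate) / denominator)


OBSERVED = 66 / 5_000            # unweighted share reporting a defensive gun use
ADULT_POPULATION = 200_000_000

# (sensitivity, specificity) pairs; illustrative assumptions only.
scenarios = [(1.0, 1.0), (1.0, 0.995), (1.0, 0.99), (0.9, 0.988)]

for sensitivity, specificity in scenarios:
    true_rate = corrected_rate(OBSERVED, sensitivity, specificity)
    print(
        f"sens={sensitivity:.3f} spec={specificity:.3f} -> "
        f"true rate {true_rate:.2%}, "
        f"~{true_rate * ADULT_POPULATION:,.0f} uses per year"
    )
```

Moving the specificity from 100% to 99% is enough to cut the implied annual total by roughly three quarters, which is the sense in which the estimate is fragile.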

Those who wield guns frequently assert self-defense, regardless of whether the case facts align with such claims. This self-presentation bias, the tendency to give socially desirable answers, can make the data unreliable as well. A person who acquires a firearm for self-defense and effectively uses it to repel a criminal is demonstrating the prudence of their precautions and their ability to safeguard themselves, their loved ones, and their belongings.

Furthermore, the 2.5 million approximation extrapolates to individuals rather than households. However, the survey was randomized by dwelling unit, not by individual, which prevents a direct extrapolation to the entire national population; individuals who are the sole adult in a household are likely overrepresented. Also, the surveyors tried to speak to the male head of the household and deliberately included a higher proportion of males and of individuals from the South and West, which does not yield a representative sample of the population either. The presented data is weighted, not actual, yet the authors fail to clarify their weighting methodology.

Looking at case reports

According to surveys conducted by the Harvard Injury Control Research Center, criminal court judges often deemed self-defense gun use illegal, even when individuals had the legal right to own and carry a firearm, raising doubts about its use by law-abiding citizens for self-defense. Another survey revealed that firearms are more frequently employed for intimidation than for self-defense. Guns in households are more likely used to intimidate residents than to prevent crimes, with other weapons being more commonly employed against intruders than guns. Data from a telephone survey involving 5,800 California adolescents aged 12-17 indicated a higher likelihood of being threatened with a gun than using one for self-defense. Moreover, most reported instances of self-defense gun use among adolescents involved hostile interactions between armed individuals.

Lastly, data from wounded detainees in Washington jails confirmed that very few of them had been injured by law-abiding citizens. Most were shot during robberies and assaults or when they were caught in the crossfire. Also, unlike in the movies, criminals seek medical care when shot. If that 2.5 million self-defense figure were to hold, hospitals would be treating hundreds of thousands of criminals for gunshot wounds. Instead, total figures for gunshot victims are in the tens of thousands, and they are more likely to include victims of suicides and accidental injuries.

Kleck’s Counter Response

Naturally, Kleck countered Hemenway’s critique, suggesting it was politically motivated to downplay the estimate of self-defense gun use to advance gun control legislation and accused Hemenway of having connections with Handgun Control Inc. Hemenway clarified that he aimed to bring more scientific rigor into firearm scholarship and address the issue of false positives in survey research, denying direct ties with HCI.

Kleck questions why Hemenway did not mention the National Institute of Justice (NIJ) survey, and Hemenway explains that the NIJ survey results were unavailable when he submitted his article, and Kleck's threat to sue caused delays in publication.

In response to Hemenway pointing out the methodological limitations of their survey, Kleck proceeds with accusations of libel, writing that "The unmistakable innuendo is that some of our interviewers faked or altered interviews to create phony accounts of defensive gun uses." (p. 1459). In reality, Hemenway wasn’t alluding to falsification, but rather to the principle of "blinding" in survey methodology, which emphasizes the importance of data collectors being unaware of anticipated results to prevent bias. This concern is particularly evident in the Kleck-Gertz paper, where the head of the firm that conducted the survey, affiliated with the same institution as the principal investigator, co-authored the publication. The survey, named "National Self-Defense Survey," lacked blinding, potentially contributing to higher estimates compared to similar one-shot surveys.

Kleck further refutes the notion his results are large overestimates and criticizes Hemenway's claim that the results are extremely sensitive to the specificity rate, further suggesting Hemenway is assuming his conclusion: extremely low actual defensive gun use rates. Hemenway argues that he used Kleck’s figure of 1.33% of self-defense gun use (about 66/5000), rather than his conclusion about the rate, and then examined what the true rate of self-defense gun use would be under various assumptions about the specificity and sensitivity rates. Under most reasonable assumptions, their 2.5 million is a wild overestimate.

However, Kleck contends that the 2.5 million estimate is a conservative one because the test tends to produce numerous false negatives (instances where individuals deny using a gun in self-defense when they did). In making this argument, Kleck posits an uncommon scenario where the test exhibits both an exceptionally high specificity rate and an exceptionally low sensitivity rate. To support these extreme rates, Kleck emphasizes that self-defensive gun use is more often viewed as negative, asserting that the 'social desirability response bias' does not distort the results. He repeatedly states that "most of the reported defensive gun uses involved illegal behavior" (p. 1455) and that asking about self-defense gun use is equivalent to "requiring respondents to report their own illegal behavior" (pp. 1447, 1458), making them hesitant to disclose an unlawful self-defense gun use out of fear that it might lead to legal consequences.

First, Hemenway points out that the social desirability bias doesn't have to be predominant for most people, given the rare nature of the event, and that the available evidence strongly suggests that the majority of people perceive self-defense gun use as socially beneficial, and often heroic. In the Kleck-Gertz survey, over 46% of respondents assert that their use of a gun might have saved someone’s life, indicating a perception of heroism. If these claims were accurate, self-defense gun use would have prevented over 1 million murders annually, even though the total number of murders in 1994 was far lower (27,000). Second, Kleck offers no evidence about the legality of self-defense gun use in his research, explicitly stating that they did not evaluate the lawfulness or morality of participants' defensive actions (Kleck & Gertz, p. 163). Moreover, the deliberate oversampling of men in regions with high gun ownership and lenient carrying laws, such as the West and the South, casts doubt on the alleged illegality of these actions. Third, participants were not instructed to reveal any unlawful aspects of their conduct, and neither the National Crime Victimization Survey (NCVS) nor one-shot interviews have used the obtained information to single out or penalize individuals for engaging in illegal activities.
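The life-saving claim in the first point can be checked with two multiplications. A minimal sketch, using only the figures quoted in this post (46% as a lower bound on "over 46%") and treating every "might have saved a life" answer as one prevented murder, which is the generous reading being tested:

```python
ANNUAL_DGU_ESTIMATE = 2_500_000   # Kleck-Gertz estimate
LIFE_SAVED_SHARE = 0.46           # respondents saying a life might have been saved
MURDERS_1994 = 27_000             # total murders cited in the text

implied_lives_saved = ANNUAL_DGU_ESTIMATE * LIFE_SAVED_SHARE
ratio_to_actual_murders = implied_lives_saved / MURDERS_1994

print(f"Implied murders prevented per year: {implied_lives_saved:,.0f}")
print(f"That is about {ratio_to_actual_murders:.0f} times the murders that occurred")
```

For the survey answers to be accurate, armed citizens would have had to prevent dozens of murders for every one that actually took place.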

Kleck suggests that a significant number of less severe gunshot wounds go untreated in emergency rooms, and he asserts that only a small fraction of wounded criminals seek medical attention. However, this claim lacks supporting evidence, and medical professionals find it implausible, as there is no indication of widespread untreated gunshot wounds leading to infection or sepsis.

Kleck contends that it is impossible to validate his 2.5 million figure by comparing it to other results, dismissing the reliability of the National Crime Victimization Surveys (NCVS), which show much lower incidences of self-defensive gun use (see section below). He argues that defensive gun use involves criminal behavior (not a good argument to loosen gun restrictions), making victims hesitant to report incidents to the NCVS. Hemenway reasserts that the NCVS is considered a reliable source for serious crime estimates, and Kleck himself has acknowledged its careful refinement. Kleck's reliance on a smaller survey, which lacks the sophistication of the NCVS, raises questions about the credibility of his robbery victimization estimates compared to the NCVS figures.

Additionally, Kleck shifts from asserting that most respondents deliberately lied about self-defense gun use to surveyors to now claiming that the majority don't report the incidents at all in the NCVS. Even if these claims were accurate, his figures for self-defense gun use during burglaries would still suggest an unrealistically high success rate in cases of occupied homes being burglarized.

According to Kleck, over 1,000 respondents in the National Crime Victimization Survey should have reported self-defense gun use (more than 90,000 interviewed), but only a fraction do (to be exact, 34), implying widespread deliberate lying or non-reporting, a pattern inconsistent with the survey's design. Even though he argues that respondents hide gun use due to perceived negativity and illegality, this overlooks the survey's confidentiality, lack of inquiry into legality, and absence of reported punishments. It also is rather inconsistent with the claim that most gun owners bought their guns for self-protection, and respondents claim, almost half the time, that their gun use might have saved an innocent life (something positive). If Kleck's figures were accurate, gun-owning citizens should be significantly safer from homicide, but case-control studies indicate the opposite.

So how often are guns used in self-defense?

The National Crime Victimization Survey (NCVS), which interviews its respondents twice a year, typically registers notably fewer occurrences of defensive gun use, despite polling a larger pool of respondents. According to the firearms violence report from April 2022, 2% of victims of nonfatal violent crimes (including rape, sexual assault, robbery, and aggravated assault) and 1% of property crime victims resort to using guns in self-defense. The survey reveals that between 2014 and 2018, guns were defensively employed in 166,900 nonfatal violent crimes, averaging 33,380 per year. Similarly, defensive gun use was reported in 183,300 property crimes over the same period, averaging 36,660 per year. In total, this amounts to 70,040 instances of defensive gun use annually.
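For readers who want to verify the averages, here is a short Python sketch using only the NCVS totals quoted above. The comparison against the 2.5 million figure at the end is my own addition for scale.

```python
YEARS = 5                          # 2014 through 2018 inclusive
VIOLENT_CRIME_DGUS = 166_900       # defensive gun uses in nonfatal violent crimes
PROPERTY_CRIME_DGUS = 183_300      # defensive gun uses in property crimes
KLECK_ESTIMATE = 2_500_000         # annual figure from the Kleck-Gertz survey

violent_per_year = VIOLENT_CRIME_DGUS // YEARS
property_per_year = PROPERTY_CRIME_DGUS // YEARS
total_per_year = violent_per_year + property_per_year

print(f"Violent crime DGUs per year:  {violent_per_year:,}")
print(f"Property crime DGUs per year: {property_per_year:,}")
print(f"Total DGUs per year:          {total_per_year:,}")
print(f"Kleck estimate is {KLECK_ESTIMATE / total_per_year:.0f}x the NCVS figure")
```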

In 2015, Hemenway examined five years' worth of NCVS data and determined that instances of self-defensive gun use were infrequent, accounting for less than 1% of all crimes occurring in the presence of a victim. Unlike Kleck's data, which came from the general population, the NCVS data Hemenway analyzed came from interviews with individuals who had experienced actual crime situations. This research also revealed that seeking assistance was as effective as resorting to the use of a gun to prevent an injury.

Examining the demographics, the nature of incidents, their locations, and the underlying causes of gun violence provides insights into the validity of the self-defense argument. The majority of Americans holding concealed carry permits are white men residing in rural areas, yet it is young black men in urban settings who disproportionately face violence. Furthermore, violent crimes tend to be concentrated in specific geographical areas. Between 1980 and 2008, half of all gun violence incidents in Boston occurred in just 3 percent of the city. Similarly, in Seattle over 14 years, every juvenile crime incident occurred on less than 5 percent of street segments. This implies that individuals carrying guns have a limited likelihood of encountering situations where they could legitimately use them for self-defense.

Some have argued the NCVS underestimates defensive gun use. The 2021 Firearms Survey found 1.67 million instances of defensive gun use, but then again, its author, William English, does state that “the most authoritative resource for estimating defensive gun use in the U.S. has been the ‘National Self-Defense Survey’ conducted by Kleck and Gertz in 1993.” Go figure. This survey is subject to the same limitations as Kleck’s, despite claiming to use a more sophisticated method of questioning respondents.

How effective are guns in saving lives or reducing crime for that matter?

Despite the wide potential range of the incidence of gun use for self-defense, one thing Kleck and Hemenway can agree on is that none of these studies can accurately pinpoint how effective guns are in saving lives or thwarting crime. Most experts in the field conclude they are rather ineffective, despite the assertions of NRA’s darling, John Lott. The most comprehensive critique of Lott's work can be found here, and I attempt to summarize it below, alongside other practical resources.

Almost every statistic used by Republican congressmen, gun lobbyists, and the NRA is rooted in his research. His findings assert that guns enhance American safety and that imposing restrictions on firearms makes America more dangerous. In collaboration with David Mustard from the University of Chicago, he asserted that the implementation of "shall-issue" concealed carry laws resulted in a decrease in rapes and killings. Their argument posited that if all states had adopted such laws by 1992, there would have been substantial reductions in crime, further asserting such laws are the most cost-effective way of reducing crime. Additionally, he contended that mass shooters target gun-free zones and falsely asserted that the FBI overlooked numerous instances of active shootings that were supposedly halted by defensive gun use.

"Shall-issue" means that once an applicant meets the fundamental criteria established by state law, the issuing authority (which could be the county sheriff, police department, state police, etc.) is obligated to grant a permit.

Computer scientist Tim Lambert critiqued Lott's work, pointing out multiple flaws and disputing the assertion of a rise in gun ownership during that time frame. Lambert highlighted Lott's cherry-picking: homing in on only two surveys on gun ownership between 1977 and 1992 while disregarding numerous others, and inadequately addressing survey limitations, which included self-presentation bias, overestimates, sampling errors, variations in question wording, and individuals' reluctance to respond truthfully to questions.

Lott countered that more people carried guns in public after the enactment of shall-issue laws, but this was factually baseless. Surveys indicate that 5-11% of US adults already carried guns for self-protection before concealed carry laws were implemented, making it highly unlikely that the 1% of the population identified by Lott, who obtained concealed carry permits after the passage of "shall-issue" laws, could be solely responsible for the observed decrease in crime. Lambert points out that many permit holders were likely already carrying firearms illegally, making "shall-issue" laws minimally impactful on gun carrying. Lott's weak explanation, that the 1% had a higher risk of encountering crime, also contradicts empirical evidence. Studies show that areas with high crime pre-law had fewer new permits. Dade County police records also reveal rare instances of defensive gun use by permit holders. Lott's selective use of evidence raises doubts about the true impact of concealed carry laws on crime and even earned criticism from criminologist Gary Kleck, author of the 2.5 million annual self-defense gun use figure cited above.

Critics also point out numerous bizarre and inconsistent findings in Lott's work that defy established criminological facts. For instance, his assertion that rural areas are more dangerous than cities contradicts FBI data. Lott's model implies that increasing unemployment and reducing the number of middle-aged and elderly black women would significantly decrease the homicide rate, conclusions so unusual that they cast doubt on the validity of the entire study. Despite middle-aged black women rarely being involved in homicide, Lott's results suggest a 1% decrease in their population leads to a 59% drop in homicide (and a 74% increase in rape).

Lott's data indicates a weak deterrent effect on robberies, further casting doubt on the notion that armed law-abiding citizens could deter crime at all. Interestingly, concealed-carry laws supposedly reduce murder and rape but elevate property crime rates, with Lott attributing this to criminals opting for property crimes to avoid armed civilians. Rightfully, skeptics have flagged the validity of this explanation, questioning whether auto theft can genuinely substitute for rape and murder.

Lott's model also lacks consideration for crime waves, omitting crucial variables like gangs, drug consumption, and community policing. Attempts to correct this absence using a linear time trend are criticized for incorrectly assuming perpetual crime increases. Slight model changes to Lott’s work yield vastly different results, and essential crime controls are blatantly excluded from Lott’s work.

In fact, two econometricians, Dan Black and Daniel Nagin, demonstrated this. By making minor adjustments to Lott's statistical models or applying Lott's methods to a different set of data, they generated completely different outcomes.

Zimring and Hawkins highlighted that "shall-issue" laws were mainly implemented in NRA-influenced regions like the South, West, and rural areas, minimizing their impact on the broader social landscape. They argued that the spike in homicide rates in major eastern cities during the 1980s and 1990s was more likely linked to the crack epidemic.

A significant drawback of Lott's paper is its lack of predictive power, making it essentially useless. Researchers tested Lott's model with new data from 14 additional jurisdictions adopting concealed carry laws between 1992 and 1996. According to Lott's own model, these areas experienced increased crime across all categories.

In response to the growing controversy over gun violence and particularly Right-to-Carry (RTC) laws, the National Research Council (NRC) convened a panel of 16 experts to examine the existing literature. The panel concluded 15-1 that the current evidence does not support Lott’s claim that RTC laws reduce crime. John Donohue from Stanford followed up and improved upon the work from the NRC, reaching the same conclusion: these laws don’t reduce crime and are potentially harmful.

Lott and the NRA like to highlight that more studies appear to support the idea that Right-to-Carry (RTC) laws reduce crime than oppose it. However, this is only after selectively omitting at least two studies and, depending on the criteria, possibly more than seven that contradict their stance. Additionally, studies included on his side have been thoroughly discredited.

Another one of John Lott’s most egregious assertions is that most public mass shootings in the United States (94-98%) since at least 1950 have taken place where citizens were banned from carrying guns, or gun-free zones, and that RTC laws are linked to substantial decreases in both the occurrence of public mass shootings and the overall casualties resulting from such incidents, in line with the NRA’s assertion that an armed society is a safer one. Of course, his research on gun-free zones, once again, blatantly omits data in support of the contrary and is carried out through his own nonprofit, the Crime Prevention Research Center. Interestingly, even his research found at least six other mass shootings didn’t occur in areas that banned guns.

Critics also argue that gun violence statistics from 1950 to 1990 are obsolete due to the widespread bans or stringent restrictions on concealed firearms in many states during that time. This would imply that nearly every public mass shooting from those years is considered to have occurred in a gun-free zone. Furthermore, Lott inaccurately categorizes a considerable number of mass shootings that occurred in regions permitting guns. The best available evidence doesn’t support his claim. A more recent study in 2002 expanded Lott’s analysis and evaluated RTC laws in 25 states from 1977 to 1999. Its authors concluded that “RTC laws do not affect mass public shootings at all.” Among the five studies on concealed carry laws and mass shootings recognized by RAND, only Lott's study suggests that shall-issue laws result in fewer mass shootings and casualties. According to an FBI report on 160 active shooting events, only one was halted by a concealed carry permit holder, whereas armed guards, off-duty police, and unarmed civilians stopped more incidents (4, 2, and 21, respectively), further refuting the notion that average armed civilians would be likely to deter mass shootings.

Lott attempted to undermine the FBI's credibility by analyzing active shooter reports from 2014 to 2021. While the FBI found 252 active shootings with 4.4% stopped by an armed civilian, Lott claimed 360 shootings with 34.4% stopped by an armed civilian. He did this by expanding the FBI's definition of an active shooter event, applying it selectively to cases with defensive gun use and excluding thousands without, leading to misleadingly inflated statistics.

Conversely, a study by Everytown for Gun Safety found that between 2009 and 2016, just 10% of mass shootings took place in gun-free zones, as most mass shootings occur in private residences, which aren’t gun-free. This figure is likely too low. The discrepancy often resides in inclusion/exclusion criteria.

Regardless, there is compelling evidence that an armed school is not safer than an unarmed one. This misconception has resulted in misguided policy responses to school shootings, exemplified by a North Carolina county's decision to arm all school security guards with AR-15 rifles. We have clear evidence indicating that campus law enforcement has failed to stop the rise of gunfire on school grounds.

Of 225 school gunfire incidents identified by The Washington Post from 1999 to 2022, only two saw a school resource officer successfully intervene. In many cases, armed guards were overpowered by well-equipped shooters, indicating their presence did not deter mass shooters.

A study covering 133 K-12 school shootings from 1980 to 2019 found that 1 in 4 incidents had an armed guard, but the presence of armed guards did not reduce casualties; instead, there was a death rate 2.83 times higher in such shootings.

The work of Arthur Kellermann has found that guns in the home are associated with a nearly fivefold increase in the odds of suicide. In another similar study, guns in the home were found four times more likely to be involved in an accidental shooting, seven times more likely to be used in an assault or homicide, and 11 times more likely to be used in a suicide than they were to be used for self-defense. Most of the data we have suggests guns are more likely to increase crime, not reduce it.

I could keep going on about John Lott’s unethical behavior, from fabricating whole surveys (and then claiming his hard drive crashed in June of 1997, erasing all evidence of the survey), to his revisionist tendencies, most often with more flawed assertions and questionable data, and even inventing fake identities to defend his work. However, that further detracts from the real issue at hand: failure to enact policies that will further help reduce crime altogether, such as affordable housing, healthcare, paid leave, and yes, sensible gun laws.
